The developer landscape has dramatically changed in recent years. It used to be fairly common for us developers to run all of our tools (databases, web servers, development IDEs…) on our own machines, but cloud services such as GitHub, MongoDB Atlas and AWS Lambda are drastically changing the game. They make it increasingly easy for developers to write and run code anywhere and on any device with no (or very few) dependencies.

A few years ago, if you crashed your machine, lost it or simply ran out of power, it would have probably taken you a few days before you got a new machine back up and running with everything you need properly set up and configured the way it previously was.

With developer tools in the cloud, you can now switch from one laptop to another with minimal disruption. However, it doesn’t mean everything is rosy. Writing and debugging code in the cloud is still challenging; as developers, we know that having a local development environment, although more lightweight, is still very valuable.

And that’s exactly what I’ll try to show you in this blog post: how to easily integrate an AWS Lambda Node.js function with a MongoDB database hosted in MongoDB Atlas, the DBaaS (database as a service) for MongoDB. More specifically, we’ll write a simple Lambda function that creates a single document in a collection stored in a MongoDB Atlas database. I’ll guide you through this tutorial step-by-step, and you should be done with it in less than an hour.

Let’s start with the necessary requirements to get you up and running:

- An Amazon Web Services account available with a user having administrative access to the IAM and Lambda services. If you don’t have one yet, sign up for a free AWS account.

- A local machine with Node.js (I told you we wouldn’t get rid of local dev environments so easily…). We will use Mac OS X in the tutorial below but it should be relatively easy to perform the same tasks on Windows or Linux.

- A MongoDB Atlas cluster alive and kicking. If you don’t have one yet, sign up for a free MongoDB Atlas account and create a cluster in just a few clicks. You can even try our free M0 cluster tier, perfect for small-scale development projects!

Now that you know about the requirements, let’s talk about the specific steps we’ll take to write, test and deploy our Lambda function:

- MongoDB Atlas is by default secure, but as application developers, there are steps we should take to ensure that our app complies with least privilege access best practices. Namely, we’ll fine-tune permissions by creating a MongoDB Atlas database user with only read/write access to our app database.

- We will set up a Node.js project on our local machine, and we’ll make sure we test our lambda code locally end-to-end before deploying it to Amazon Web Services.

- We will then create our AWS Lambda function and upload our Node.js project to initialize it.

- Last but not least, we will make some modifications to our Lambda function to encrypt some sensitive data (such as the MongoDB Atlas connection string) and decrypt it from the function code.

A short note about VPC Peering

I’m not delving into the details of setting up VPC Peering between our MongoDB Atlas cluster and AWS Lambda for 2 reasons: 1) we already have a detailed VPC Peering documentation page and a VPC Peering in Atlas post that I highly recommend and 2) M0 clusters (which I used to build that demo) don’t support VPC Peering.

Here’s what happens if you don’t set up VPC Peering though:

- You will have to add the infamous 0.0.0.0/0 CIDR block to your MongoDB Atlas cluster IP Whitelist because you won’t know which IP address AWS Lambda is using to make calls to your Atlas database.

- You will be charged for the bandwidth usage between your Lambda function and your Atlas cluster.

If you’re only trying to get this demo code to run, these 2 caveats are probably fine, but if you’re planning to deploy a production-ready Lambda-Atlas integration, setting up VPC Peering is a security best practice we highly recommend. M0 is our current free offering; check out our MongoDB Atlas pricing page for the full range of available instance sizes.

As a reminder, for development environments and low traffic websites, M0, M10 and M20 instance sizes should be fine. However, for production environments that support high traffic applications or large datasets, M30 or larger instance sizes are recommended.

Setting up security in your MongoDB Atlas cluster

Making sure that your application complies with least privilege access policies is crucial to protect your data from nefarious threats. This is why we will set up a specific database user that will only have read/write access to our travel database. Let’s see how to achieve this in MongoDB Atlas:

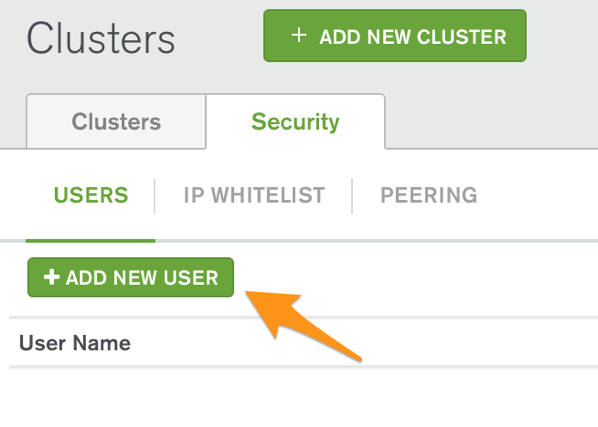

On the Clusters page, select the Security tab, and press the Add New User button

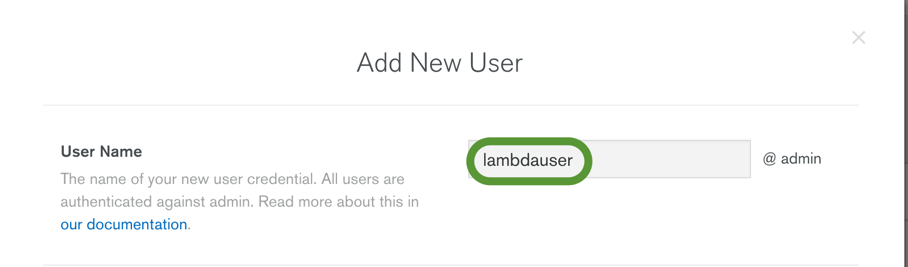

In the pop-up window that opens, add a user name of your choice (such as lambdauser):

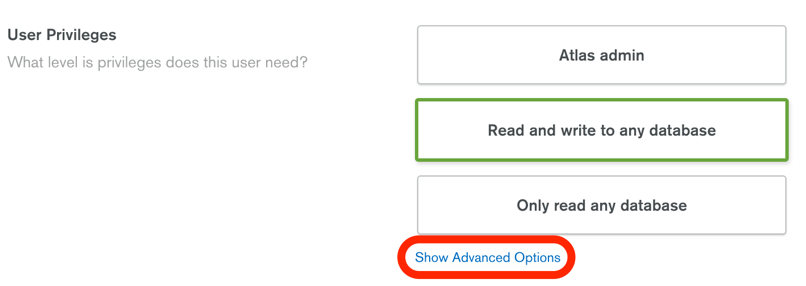

In the User Privileges section, select the Show Advanced Options link. This allows us to assign read/write on a specific database, not any database.

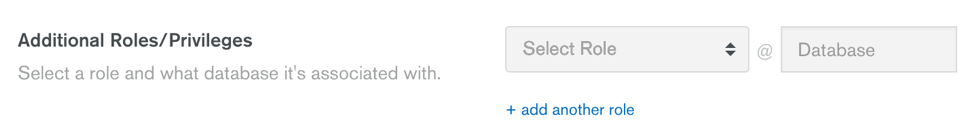

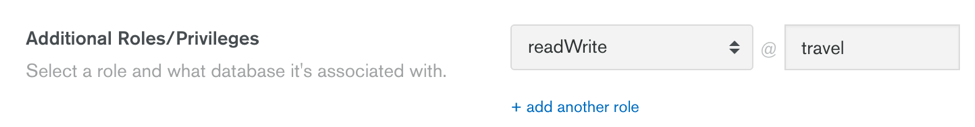

You will then have the option to assign more fine-grained access control privileges:

In the Select Role dropdown list, select readWrite and fill out the Database field with the name of the database you’ll use to store documents. I have chosen to name it travel.

In the Password section, use the Autogenerate Secure Password button (and make a note of the generated password) or set a password of your liking. Then press the Add User button to confirm this user creation.

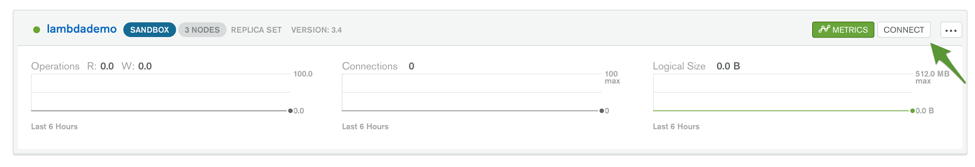

Let’s grab the cluster connection string while we’re at it since we’ll need it to connect to our MongoDB Atlas database in our Lambda code:

Assuming you already created a MongoDB Atlas cluster, press the Connect button next to your cluster:

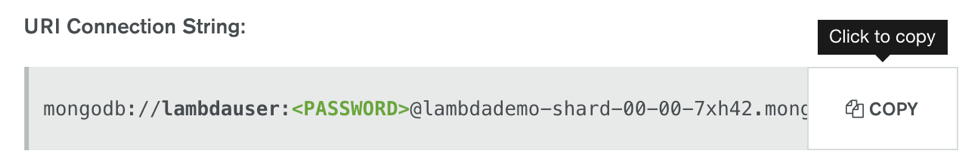

Copy the URI Connection String value and store it safely in a text document. We’ll need it later in our code, along with the password you just set.

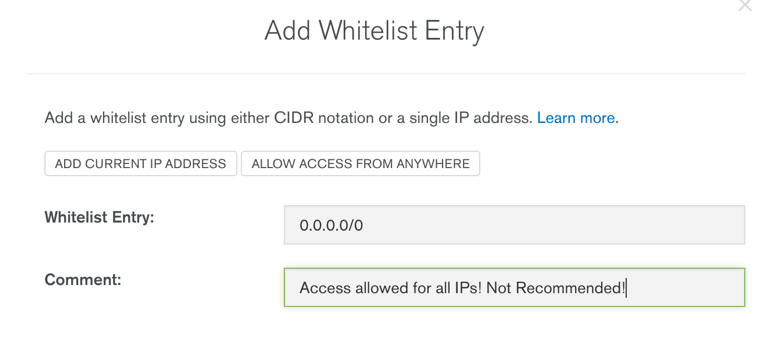

Additionally, if you aren’t using VPC Peering, navigate to the IP Whitelist tab and add the 0.0.0.0/0 CIDR block or press the Allow access from anywhere button. As a reminder, this setting is strongly NOT recommended for production use and potentially leaves your MongoDB Atlas cluster vulnerable to malicious attacks.

Create a local Node.js project

Though Lambda functions are supported in multiple languages, I have chosen to use Node.js thanks to the growing popularity of JavaScript as a versatile programming language and the tremendous success of the MEAN and MERN stacks (acronyms for MongoDB, Express.js, Angular/React, Node.js - check out Andrew Morgan’s excellent developer-focused blog series on this topic). Plus, to be honest, I love the fact it’s an interpreted, lightweight language which doesn’t require heavy development tools and compilers.

Time to write some code now, so let’s go ahead and use Node.js as our language of choice for our Lambda function.

Start by creating a folder such as lambda-atlas-create-doc

mkdir lambda-atlas-create-doc && cd lambda-atlas-create-doc

Next, run the following command from a Terminal console to initialize our project with a package.json file

npm init

You’ll be prompted to configure a few fields. I’ll leave them to your creativity but note that I chose to set the entry point to app.js (instead of the default index.js) so you might want to do so as well.

We’ll need to use the MongoDB Node.js driver so that we can connect to our MongoDB database (on Atlas) from our Lambda function, so let’s go ahead and install it by running the following command from our project root:

npm install mongodb --save

We’ll also want to write and test our Lambda function locally to speed up development and ease debugging, since instantiating a lambda function every single time in Amazon Web Services isn’t particularly fast (and debugging is virtually non-existent, unless you’re a fan of the console.log() function). I’ve chosen to use the lambda-local package because it provides support for environment variables (which we’ll use later):

(sudo) npm install lambda-local -g

Create an app.js file. This will be the file that contains our lambda function:

touch app.js

Now that you have imported all of the required dependencies and created the Lambda code file, open the app.js file in your code editor of choice (Atom, Sublime Text, Visual Studio Code…) and initialize it with the following piece of code:

Let’s pause a bit and comment the code above, since you might have noticed a few peculiar constructs:

- The file is written exactly as the Lambda code Amazon Web Services expects (e.g. with an “exports.handler” function). This is because we’re using lambda-local to test our lambda function locally, which conveniently lets us write our code exactly the way AWS Lambda expects it. More about this in a minute.

- We are declaring the MongoDB Node.js driver that will help us connect to and query our MongoDB database.

- Note also that we are declaring a cachedDb object OUTSIDE of the handler function. As the name suggests, it's an object that we plan to cache for the duration of the underlying container AWS Lambda instantiates for our function. This saves the precious milliseconds (and even seconds) that would otherwise be spent creating a database connection between Lambda and MongoDB Atlas on every call. For more information, please read my follow-up blog post on how to optimize Lambda performance with MongoDB Atlas.

- We are using an environment variable called MONGODB_ATLAS_CLUSTER_URI to pass the URI connection string of our Atlas database, mainly for security purposes: we obviously don’t want to hardcode this URI in our function code, along with very sensitive information such as the username and password we use. Since AWS Lambda has supported environment variables since November 2016 (as the lambda-local NPM package does), we would be remiss not to use them.

- The function code looks a bit convoluted with the seemingly useless if-else statement and the processEvent function but it will all become clear when we add decryption routines using AWS Key Management Service (KMS). Indeed, not only do we want to store our MongoDB Atlas connection string in an environment variable, but we also want to encrypt it (using AWS KMS) since it contains highly sensitive data (note that you might incur charges when you use AWS KMS even if you have a free AWS account).
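The caching idea behind cachedDb can be sketched as follows. This is an illustration under stated assumptions: `connectToDatabase` and the injected `connect` function are hypothetical names, and in a real project `connect` would wrap the driver call that opens the connection (for example `MongoClient.connect(uri)`, which returns a promise when no callback is passed).

```javascript
'use strict';

// Declared OUTSIDE the handler: survives for as long as the container is reused.
let cachedDb = null;

// `connect` is a hypothetical stand-in for the driver call that opens the
// connection, e.g. (uri) => MongoClient.connect(uri) in a real project.
function connectToDatabase(uri, connect) {
  if (cachedDb === null) {
    // Cold start: open the connection once and keep the resulting promise.
    cachedDb = connect(uri);
  }
  // Warm invocations reuse the same promise, so no new connection is opened.
  return cachedDb;
}

// Usage with a fake connector that only counts how often it is called.
let connections = 0;
const fakeConnect = (uri) => {
  connections += 1;
  return Promise.resolve({ uri });
};

const first = connectToDatabase('mongodb://localhost/travel', fakeConnect);
const second = connectToDatabase('mongodb://localhost/travel', fakeConnect);
console.log(first === second, connections); // true 1
```

A production version would probably also reset `cachedDb` when the connection attempt fails, so that a rejected promise is not cached for the lifetime of the container.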

Now that we’re done with the code comments, let’s create an event.json file (in the root project directory) and fill it with the following data:

    {
      "address" : {
        "street" : "2 Avenue",
        "zipcode" : "10075",
        "building" : "1480",
        "coord" : [ -73.9557413, 40.7720266 ]
      },
      "borough" : "Manhattan",
      "cuisine" : "Italian",
      "grades" : [
        {
          "date" : "2014-10-01T00:00:00Z",
          "grade" : "A",
          "score" : 11
        },
        {
          "date" : "2014-01-16T00:00:00Z",
          "grade" : "B",
          "score" : 17
        }
      ],
      "name" : "Vella",
      "restaurant_id" : "41704620"
    }

(in case you’re wondering, that JSON file is what we’ll send to MongoDB Atlas to create our BSON document)

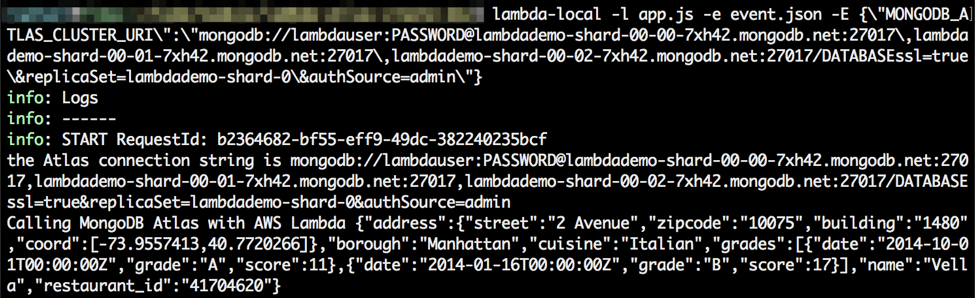

Next, make sure that you’re set up properly by running the following command in a Terminal console:

If you want to test it with your own cluster URI Connection String (as I’m sure you do), don’t forget to escape the double quotes, commas and ampersand characters in the -E parameter, otherwise lambda-local will throw an error. You should also replace the $PASSWORD and $DATABASE keywords with your own values.
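Escaping by hand is error-prone, so one option is to let a few lines of Node.js build the value of lambda-local's -E (environment) option for you. The sketch below rests on several assumptions: the helper names `buildAtlasUri` and `buildEnvArgument` are hypothetical, the host `cluster0.example.mongodb.net` is a placeholder, and only the three characters named above are backslash-escaped. The password is percent-encoded because characters such as `@` or `:` would otherwise break the connection string.

```javascript
'use strict';

// Substitute the $PASSWORD and $DATABASE keywords in a connection string template.
// Function replacers are used so that '$' sequences in the password are taken literally.
function buildAtlasUri(template, password, database) {
  return template
    .replace('$PASSWORD', () => encodeURIComponent(password))
    .replace('$DATABASE', () => database);
}

// Build the JSON passed to lambda-local's -E option, backslash-escaping the
// double quotes, commas and ampersands for the shell.
function buildEnvArgument(uri) {
  const json = JSON.stringify({ MONGODB_ATLAS_CLUSTER_URI: uri });
  return json.replace(/[",&]/g, (ch) => '\\' + ch);
}

// Hypothetical template; use the URI Connection String copied from Atlas instead.
const template =
  'mongodb://lambdauser:$PASSWORD@cluster0.example.mongodb.net:27017/$DATABASE?ssl=true&authSource=admin';

const uri = buildAtlasUri(template, 'p@ss,word', 'travel');
console.log('lambda-local -l app.js -e event.json -E ' + buildEnvArgument(uri));
```

The printed line can then be pasted into a Terminal console from the project root, where app.js and event.json live.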

After you run it locally, you should get the following console output:

If you get an error, check your connection string and the double quotes/commas/ampersand escaping (as noted above).

Now, let’s get down to the meat of our function code by customizing the processEvent() function and adding a createDoc() function:

Note how easy it is to connect to a MongoDB Atlas database and insert a document, as well as the small piece of code I added to translate JSON dates (formatted as ISO-compliant strings) into real JavaScript dates that MongoDB can store as BSON dates.
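The date translation could look roughly like the sketch below. It is an approximation under stated assumptions: `toBsonReadyDoc` is a hypothetical helper, the `restaurants` collection name is an assumption, and `db` is expected to be an already connected database object from the driver.

```javascript
'use strict';

// Convert the ISO-formatted date strings found in event.json into JavaScript
// Date objects, which the driver serializes as BSON dates.
function toBsonReadyDoc(event) {
  // Deep copy so the incoming event object is left untouched.
  const doc = JSON.parse(JSON.stringify(event));
  if (Array.isArray(doc.grades)) {
    doc.grades.forEach((grade) => {
      if (typeof grade.date === 'string') {
        grade.date = new Date(grade.date);
      }
    });
  }
  return doc;
}

// Insert the document; `db` is a connected database object from the driver.
// insertOne() returns a promise when no callback is supplied.
function createDoc(db, event) {
  return db.collection('restaurants').insertOne(toBsonReadyDoc(event));
}

const sample = {
  name: 'Vella',
  grades: [{ date: '2014-10-01T00:00:00Z', grade: 'A', score: 11 }],
};
const converted = toBsonReadyDoc(sample);
console.log(converted.grades[0].date instanceof Date); // true
console.log(converted.grades[0].date.toISOString());   // 2014-10-01T00:00:00.000Z
```

Without the conversion, the dates would be stored as plain strings, which makes date range queries and sorting less reliable.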

You might also have noticed my performance optimization comments and the call to context.callbackWaitsForEmptyEventLoop = false. If you're interested in understanding what they mean (and I think you should!), please refer to my follow-up blog post on how to optimize Lambda performance with MongoDB Atlas.
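In short, with callback-style Node.js handlers, Lambda by default waits for the event loop to be empty before sending the response, and an open (cached) database connection keeps the loop busy. Setting the flag to false sends the response as soon as the callback runs. A minimal sketch, where `fakeContext` and the result string are purely illustrative:

```javascript
'use strict';

function handler(event, context, callback) {
  // Respond as soon as callback() is invoked, even though a cached MongoDB
  // connection may keep sockets (and therefore the event loop) alive.
  context.callbackWaitsForEmptyEventLoop = false;

  callback(null, 'document processed');
}
exports.handler = handler;

// Local usage with a stand-in context object (Lambda provides the real one).
const fakeContext = { callbackWaitsForEmptyEventLoop: true };
handler({}, fakeContext, (err, result) => console.log(err, result));
console.log(fakeContext.callbackWaitsForEmptyEventLoop); // false
```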

You’re now ready to fully test your Lambda function locally. Use the same lambda-local command as before and hopefully you’ll get a nice “Kudos” success message:

If all went well on your local machine, let’s publish our local Node.js project as a new Lambda function!

Create the Lambda function

The first step we’ll want to take is to zip our Node.js project, since we won’t write the Lambda function code in the Lambda code editor. Instead, we’ll choose the zip upload method to get our code pushed to AWS Lambda.

I’ve used the zip command line tool in a Terminal console, but any method works (as long as you zip the files inside the top folder, not the top folder itself!):

zip -r archive.zip node_modules/ app.js package.json

Next, sign in to the AWS Console and navigate to the IAM Roles page and create a role (such as LambdaBasicExecRole) with the AWSLambdaBasicExecutionRole permission policy:

Let’s navigate to the AWS Lambda page now. Click on Get Started Now (if you’ve never created a Lambda function) or on the Create a Lambda function button. We’re not going to use any blueprint and won’t configure any trigger either, so select Configure function directly in the left navigation bar:

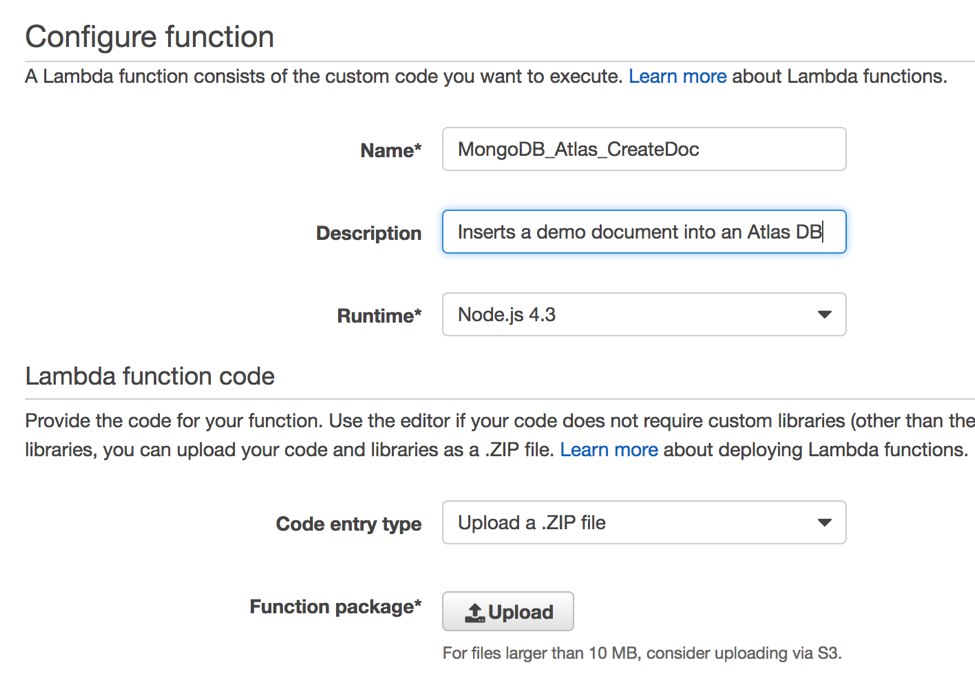

In the Configure function page, enter a Name for your function (such as MongoDB_Atlas_CreateDoc). The runtime is automatically set to Node.js 4.3, which is perfect for us, since that’s the language we’ll use. In the Code entry type list, select Upload a .ZIP file, as shown in the screenshot below:

Click on the Upload button and select the zipped Node.js project file you previously created.

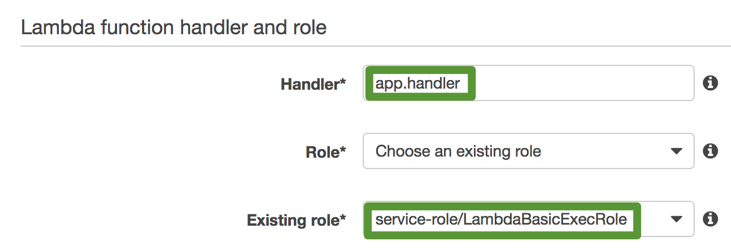

In the Lambda function handler and role section, modify the Handler field value to app.handler (why? here’s a hint: I’ve used an app.js file, not an index.js file for my Lambda function code...) and choose the existing LambdaBasicExecRole role we just created:

In the Advanced Settings section, you might want to increase the Timeout value to 5 or 10 seconds, but that’s always something you can adjust later on. Leave the VPC and KMS key fields at their default values (unless you want to use a VPC and/or a KMS key) and press Next.

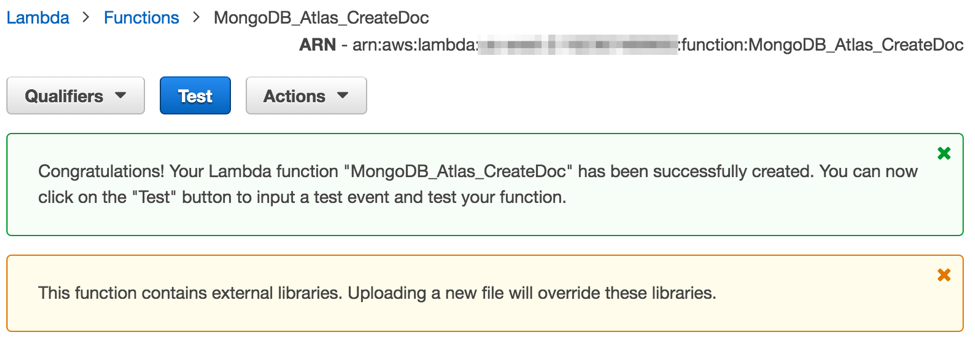

Last, review your Lambda function and press Create function at the bottom. Congratulations, your Lambda function is live and you should see a page similar to the following screenshot:

But do you remember our use of environment variables? Now is the time to configure them and use the AWS Key Management Service to secure them!

Configure and secure your Lambda environment variables

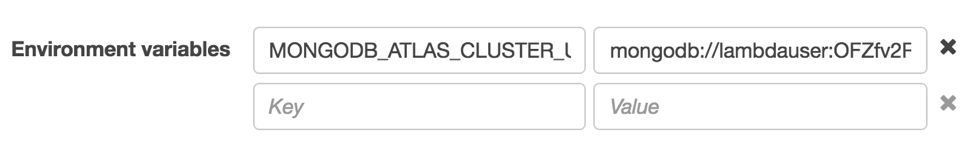

Scroll down in the Code tab of your Lambda function and create an environment variable with the following properties:

| Name | Value |
|---|---|
| MONGODB_ATLAS_CLUSTER_URI | YOUR_ATLAS_CLUSTER_URI_VALUE |

At this point, you could press the Save and test button at the top of the page, but for additional (and recommended) security, we’ll encrypt that connection string.

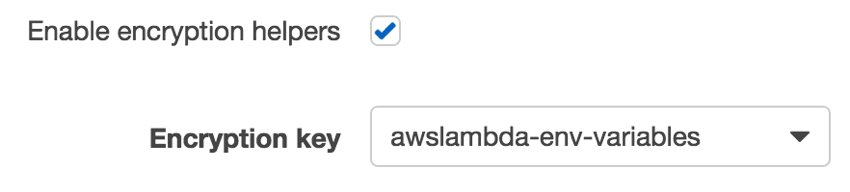

Check the Enable encryption helpers check box and if you already created an encryption key, select it (otherwise, you might have to create one - it’s fairly easy):

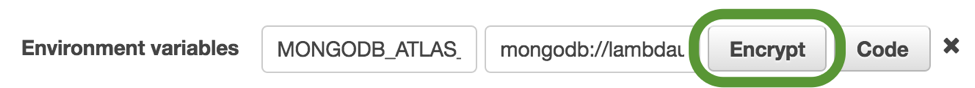

Next, select the Encrypt button for the MONGODB_ATLAS_CLUSTER_URI variable:

Back in the inline code editor, add the following line at the top:

const AWS = require('aws-sdk');

and replace the contents of the “else” statement in the “exports.handler” method with the following code:
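A sketch of what that decryption step might look like, assuming the callback-style `decrypt` call of the AWS SDK for JavaScript v2 KMS client. The function name `resolveConnectionUri` is hypothetical, and the `kms` client is passed in as a parameter so the snippet can be exercised locally with a stand-in:

```javascript
'use strict';

// In the Lambda function itself the client would come from the SDK:
//   const AWS = require('aws-sdk');
//   const kms = new AWS.KMS();
function resolveConnectionUri(kms, encryptedUri, callback) {
  // The encrypted environment variable is base64 text; KMS expects raw bytes.
  const params = { CiphertextBlob: Buffer.from(encryptedUri, 'base64') };
  kms.decrypt(params, (err, data) => {
    if (err) {
      console.log('Decrypt error:', err);
      return callback(err);
    }
    // data.Plaintext is a Buffer holding the decrypted connection string.
    callback(null, data.Plaintext.toString('ascii'));
  });
}

// Local stand-in for the KMS client: "decrypts" by returning the bytes unchanged.
const fakeKms = {
  decrypt: (params, cb) => cb(null, { Plaintext: params.CiphertextBlob }),
};

const encrypted = Buffer.from('mongodb://user:secret@host/travel').toString('base64');
let decryptedUri = null;
resolveConnectionUri(fakeKms, encrypted, (err, uri) => { decryptedUri = uri; });
console.log(decryptedUri); // mongodb://user:secret@host/travel
```

Inside the handler's else branch, the decrypted value would be kept in a variable declared outside the handler before calling processEvent(event, context, callback), so that warm invocations skip the KMS round trip.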

(hopefully the convoluted code we originally wrote makes sense now!)

If you want to check the whole function code I’ve used, check out the following Gist. And for the Git fans, the full Node.js project source code is also available on GitHub.

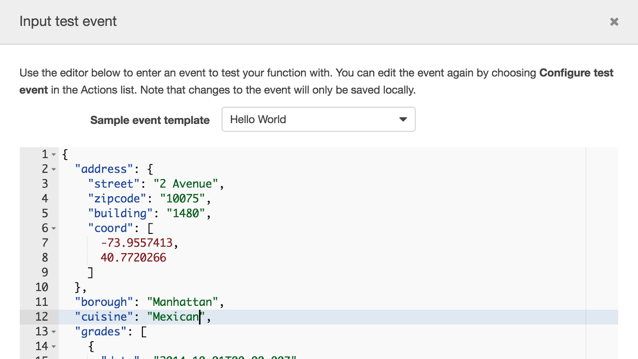

Now press the Save and test button and in the Input test event text editor, paste the content of our event.json file:

Scroll down and press the Save and test button.

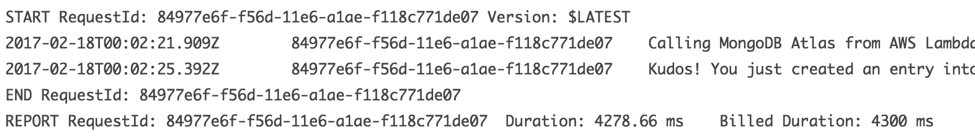

If you configured everything properly, you should receive the following success message in the Lambda Log output:

Kudos! You can savor your success for a few minutes before reading on.

What’s next?

I hope this AWS Lambda-MongoDB Atlas integration tutorial provides you with the right steps for getting started in your first Lambda project. You should now be able to write and test a Lambda function locally and keep sensitive data (such as your MongoDB Atlas connection string) securely encrypted with AWS KMS.

So what can you do next?

- If you don’t have a MongoDB Atlas account yet, it’s not too late to create one!

- If you’re not familiar with the MongoDB Node.js driver, check out our Node.js driver documentation to understand how to make the most of the MongoDB API. Additionally, we also offer an online Node.js course for the Node.js developers who are getting started with MongoDB.

- To visualize the data you created with your Lambda function, download MongoDB Compass and read Visualizing your data with MongoDB Compass to learn how to connect it to MongoDB Atlas.

- Planning to build a lot of Lambda functions? Learn how to orchestrate them with AWS Step Functions by reading our Integrating MongoDB Atlas, Twilio and AWS Simple Email Service with AWS Step Functions post.

- To learn how to integrate MongoDB and AWS Lambda in a more complex scenario, check out our more advanced blog post: Developing a Facebook Chatbot with AWS Lambda and MongoDB Atlas.

And of course, don’t hesitate to ask us any questions or leave your feedback in a comment below. Happy coding!

Enjoyed this post? Replay our webinar where we have an interactive tutorial on serverless architectures with AWS Lambda.

About the Author - Raphael Londner

Raphael Londner is a Principal Developer Advocate at MongoDB, focused on cloud technologies such as Amazon Web Services, Microsoft Azure and Google Cloud Engine. Previously he was a developer advocate at Okta as well as a startup entrepreneur in the identity management space. You can follow him on Twitter at @rlondner