Many of our customers provide MongoDB as a service to their development teams: developers can request a MongoDB database instance and receive a connection string and credentials within minutes. As those customers move to MongoDB Atlas, they want to keep offering their developers the same level of timely service.

While Atlas has a very powerful control plane for provisioning clusters, it’s often too powerful to put directly in the hands of developers. The goal of this article is to show how the Atlas APIs can be used to provide the same MongoDB-as-a-service experience when MongoDB is managed by Atlas.

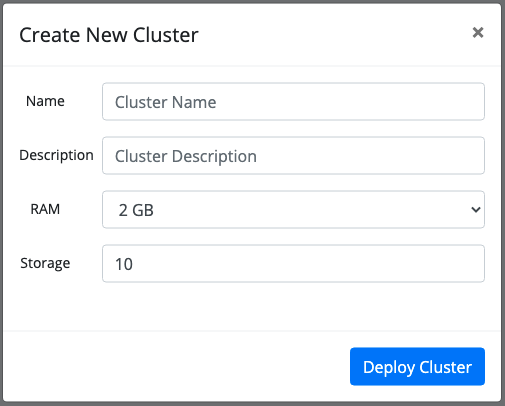

Specifically, we’ll demonstrate how to create an interface that restricts developers to a limited set of options. For simplicity, this example restricts developers to configuring their cluster from a preset list of memory and storage options; other options, such as cloud provider and region, are abstracted away. We also demonstrate how to add labels to Atlas clusters, a feature the Atlas UI doesn’t support. For example, we’ve added a label for the cluster description.
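One way to enforce this restriction is to let the frontend send only a cluster name, a size choice, and a description, and have the middle tier expand those into a full Atlas cluster specification. The sketch below is hypothetical: the preset names (`small`, `medium`, `large`), their instance and disk sizes, and the fixed AWS region are assumptions, while the body fields (`name`, `diskSizeGB`, `providerSettings`, `labels`) follow the Atlas API v1.0 cluster resource.

```javascript
// Hypothetical size presets: names, instance sizes, and disk sizes are
// illustrative assumptions, not MiniAtlas's actual values.
const SIZE_PRESETS = {
  small: { instanceSizeName: "M10", diskSizeGB: 10 },
  medium: { instanceSizeName: "M30", diskSizeGB: 40 },
  large: { instanceSizeName: "M50", diskSizeGB: 160 },
};

// Cloud provider and region are fixed by the platform team (assumed values).
const FIXED_PROVIDER = { providerName: "AWS", regionName: "US_EAST_1" };

// Expands a developer's limited choices into an Atlas API v1.0
// "create cluster" request body.
function buildClusterSpec(name, presetName, description) {
  // Own-property check so names like "toString" are not treated as presets.
  if (!Object.prototype.hasOwnProperty.call(SIZE_PRESETS, presetName)) {
    const allowed = Object.keys(SIZE_PRESETS).join(", ");
    throw new Error(`Unknown size "${presetName}"; choose one of: ${allowed}`);
  }
  const preset = SIZE_PRESETS[presetName];
  return {
    name,
    diskSizeGB: preset.diskSizeGB,
    providerSettings: { ...FIXED_PROVIDER, instanceSizeName: preset.instanceSizeName },
    // Atlas labels are key/value pairs; here one carries the description.
    labels: [{ key: "description", value: description }],
  };
}
```

Keeping the presets in the backend means developers can only request one of the approved sizes, even if they call the API directly instead of using the UI.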


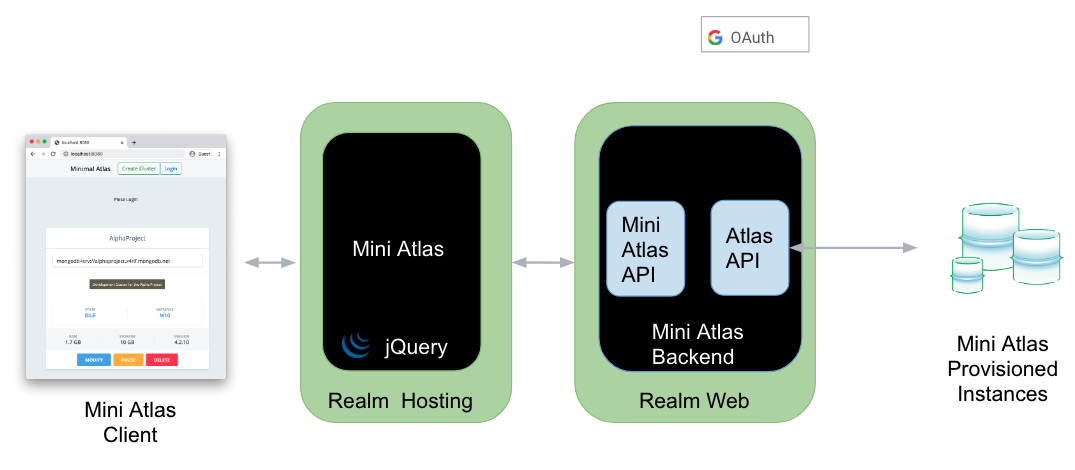

Architecture

Although the Atlas APIs could be called directly from the client interface, we chose a three-tier architecture for the following benefits:

- We can control how much functionality is exposed to frontend developers.
- We can simplify the APIs exposed to frontend developers.
- We can secure the API endpoints more granularly.
- We can take advantage of other backend features, such as Triggers and Twilio integration.

Naturally, we selected Realm to host the middle tier.


Implementation

Backend


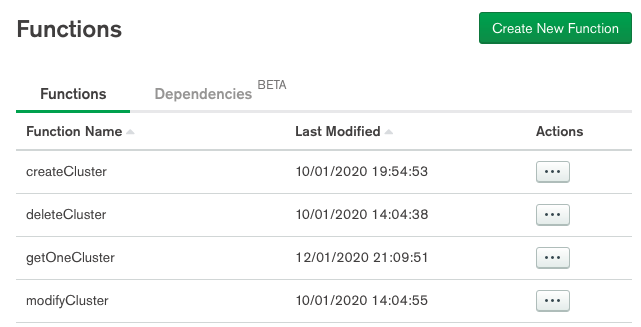

Atlas API

The Atlas APIs are wrapped in a set of Realm Functions.


For the most part, they all call the Atlas API the same way. Here is getOneCluster:

```javascript
/*
 * Gets information about the requested cluster. If clusterName is empty, all clusters will be fetched.
 * See https://docs.atlas.mongodb.com/reference/api/clusters-get-one
 */
exports = async function(username, password, projectID, clusterName) {
  const arg = {
    scheme: 'https',
    host: 'cloud.mongodb.com',
    path: 'api/atlas/v1.0/groups/' + projectID + '/clusters/' + clusterName,
    username: username,
    password: password,
    headers: {
      'Content-Type': ['application/json'],
      'Accept-Encoding': ['gzip, deflate']
    },
    // The Atlas API authenticates with HTTP Digest authentication.
    digestAuth: true
  };

  // The response body is a BSON.Binary object. Parse it and return.
  const response = await context.http.get(arg);
  return EJSON.parse(response.body.text());
};
```

You can see each function’s source on GitHub.
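Because getOneCluster appends clusterName directly to the path, an empty name turns the request into a call on the project’s `/clusters/` collection, which is how the same function lists every cluster. The hypothetical helper below rebuilds that URL as a plain string so the two cases can be compared outside Realm; it adds `encodeURIComponent` as a precaution the original does not include.

```javascript
// Hypothetical helper mirroring the path built in getOneCluster:
//   /api/atlas/v1.0/groups/{projectID}/clusters/{clusterName}
// With an empty clusterName the URL ends in "/clusters/", the list-all form.
function atlasClustersUrl(projectID, clusterName = "") {
  // encodeURIComponent escapes characters that are not URL-safe;
  // an empty string stays empty.
  return "https://cloud.mongodb.com/api/atlas/v1.0/groups/" +
    encodeURIComponent(projectID) + "/clusters/" + encodeURIComponent(clusterName);
}
```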

MiniAtlas API

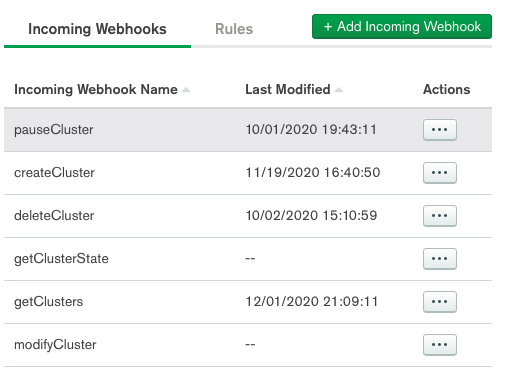

The next step was to expose the functions as endpoints that a frontend could use. Alternatively, we could have called the functions using the Realm Web SDK, but we elected to stick with REST, which is more familiar to our frontend developers.

Using Realm Third-Party Services, we developed the following six endpoints:

| API | HTTP Method | Endpoint |
|---|---|---|
| Get Clusters | GET | /getClusters |
| Create a Cluster | POST | /createCluster |
| Get Cluster State | GET | /getClusterState?clusterName=cn |
| Modify a Cluster | PATCH | /modifyCluster |
| Pause or Resume Cluster | POST | /pauseCluster |
| Delete a Cluster | DELETE | /deleteCluster?clusterName=cn |
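Each of these endpoints ultimately forwards to the Atlas Administration API. The sketch below is one hypothetical way to express that translation as a lookup: the endpoint names come from the table, but `toAtlasRequest` and its forwarding logic are assumptions, not the published MiniAtlas source. It follows the Atlas API v1.0 conventions of creating a cluster with POST on the project’s `clusters` collection and pausing or resuming one by PATCHing its `paused` field.

```javascript
// Hypothetical routing: maps a MiniAtlas endpoint to the Atlas API v1.0
// request it would forward. Paths are relative to https://cloud.mongodb.com.
const ATLAS_BASE = "/api/atlas/v1.0/groups";

function toAtlasRequest(endpoint, projectID, { clusterName, spec, paused } = {}) {
  const clusters = `${ATLAS_BASE}/${projectID}/clusters`;
  const oneCluster = `${clusters}/${clusterName}`;
  switch (endpoint) {
    case "getClusters":
      return { method: "GET", path: `${clusters}/` };
    case "createCluster":
      return { method: "POST", path: clusters, body: spec };
    case "getClusterState":
      return { method: "GET", path: oneCluster };
    case "modifyCluster":
      return { method: "PATCH", path: oneCluster, body: spec };
    case "pauseCluster":
      // Pause and resume are both a PATCH of the "paused" field.
      return { method: "PATCH", path: oneCluster, body: { paused } };
    case "deleteCluster":
      return { method: "DELETE", path: oneCluster };
    default:
      throw new Error(`Unsupported endpoint: ${endpoint}`);
  }
}
```

For getClusterState, the useful part of the Atlas response is the `stateName` field (for example `IDLE` or `CREATING`).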


Here’s the source for getClusters. Note that it pulls the Atlas API credentials and project ID from Realm Values & Secrets:

```javascript
/*
 * GET getClusters
 *
 * Query Parameters
 *
 *   None
 *
 * Response - Currently all values documented at https://docs.atlas.mongodb.com/reference/api/clusters-get-all/
 */
exports = async function(payload, response) {
  // Atlas API credentials and project ID are stored in Realm Values & Secrets.
  const username = context.values.get("username");
  const password = context.values.get("apiKey");
  const projectID = context.values.get("projectID");

  // Sending an empty clusterName will return all clusters.
  const clusterName = '';

  // Use a new variable rather than overwriting the webhook's response parameter.
  const atlasResponse = await context.functions.execute("getOneCluster", username, password,
    projectID, clusterName);

  return atlasResponse.results;
};
```

You can see each webhook’s source on GitHub.
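As its header comment notes, getClusters currently returns every field Atlas documents. To keep the API surface small, a webhook could instead map the results to just what the UI displays. The helper below is a hypothetical sketch: the field names (`name`, `stateName`, `paused`, `providerSettings.instanceSizeName`, `diskSizeGB`, `labels`) follow the Atlas API v1.0 cluster resource, and it assumes the description label is stored under the key `description`.

```javascript
// Hypothetical helper: reduces full Atlas cluster documents to the few
// fields a developer-facing UI needs.
function summarizeClusters(results) {
  return (results || []).map(cluster => {
    const labels = cluster.labels || [];
    // Assumes the description is stored as a label with key "description".
    const description = labels.find(label => label.key === "description");
    return {
      name: cluster.name,
      state: cluster.stateName,
      paused: Boolean(cluster.paused),
      instanceSize: cluster.providerSettings && cluster.providerSettings.instanceSizeName,
      diskSizeGB: cluster.diskSizeGB,
      description: description ? description.value : "",
    };
  });
}
```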

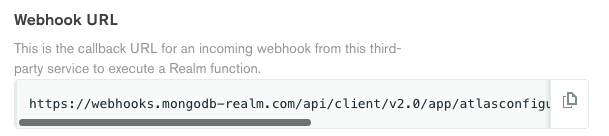

When the webhook is saved, Realm generates a Webhook URL, which serves as the endpoint for the API.


API Endpoint Security

Only authenticated users can call the API endpoints. The caller must include an Authorization header containing a valid user id, which the webhook extracts with this script function:

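```javascript
exports = function(payload) {
  const headers = context.request.requestHeaders;
  const { Authorization } = headers;

  if (!Authorization) {
    throw new Error('Missing Authorization header');
  }

  // Strip an optional "Bearer " prefix (including the space) to get the raw user id.
  const user_id = Authorization.toString().replace(/^Bearer\s+/, '');
  return user_id;
};
```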
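The header parsing can be exercised outside Realm by pulling it into a plain function. This is a standalone variant, not the deployed script: the name `extractUserId` is hypothetical, it accepts both `Bearer <id>` and a bare id (the frontend code later in this article sends the bare id), and, like the script function, it calls `toString()` so array-valued headers become a single string.

```javascript
// Hypothetical standalone variant of the webhook's script function.
function extractUserId(headers) {
  const authorization = headers && headers.Authorization;
  if (!authorization) {
    throw new Error("Missing Authorization header");
  }
  // toString() turns a one-element array like ["Bearer abc"] into "Bearer abc".
  // The regex removes a case-insensitive "Bearer" prefix plus its whitespace.
  return authorization.toString().replace(/^Bearer\s+/i, "").trim();
}
```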

MongoDB Realm includes several built-in authentication providers, including anonymous users, email/password combinations, API keys, and OAuth 2.0 through Facebook, Google, and Apple ID.

For this exercise, we elected to use Google OAuth, primarily because it’s already integrated with our SSO provider here at MongoDB.


The choice of provider isn’t important. Whatever provider or providers are enabled will generate an associated user id that can be used to authenticate access to the APIs.

Frontend


The frontend is implemented in jQuery and hosted on Realm.

Authentication

The client uses the MongoDB Stitch Browser SDK to prompt the user to log in to Google (if not already logged in) and stores the user’s Google credentials in the StitchAppClient.

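```javascript
// Redirect the user to Google sign-in; the StitchAppClient keeps the resulting credentials.
let credential = new stitch.GoogleRedirectCredential();
client.auth.loginWithRedirect(credential);
```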

The user id that must be sent with each backend API call can then be retrieved from the StitchAppClient:

```javascript
let userId = client.auth.authInfo.userId;
```

That user id is then set in the Authorization header when calling the API. Here’s an example that calls the createCluster API:

```javascript
// baseURL and handleErrors are defined elsewhere in the frontend code (not shown here).
export const createCluster = (uid, data) => {
  const url = `${baseURL}/createCluster`;
  const params = {
    method: "POST",
    headers: {
      "Content-Type": "application/json;charset=utf-8",
      // The backend's script function reads the user id from this header.
      ...(uid && { Authorization: uid })
    },
    ...(data && { body: JSON.stringify(data) })
  };

  return fetch(url, params)
    .then(handleErrors)
    .then(response => response.json())
    .catch(error => console.log(error));
};
```

You can see all the API calls in webhooks.js.
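createCluster relies on `baseURL` and `handleErrors`, whose definitions are not shown. The sketch below gives one plausible shape for `handleErrors`, plus a hypothetical `buildParams` helper that factors out the header and body handling each call would otherwise repeat; both are assumptions, not the repository’s code.

```javascript
// Hypothetical: rejects non-2xx responses so the promise chain
// skips response.json() and falls through to .catch().
function handleErrors(response) {
  if (!response.ok) {
    throw new Error(`HTTP ${response.status}: ${response.statusText}`);
  }
  return response;
}

// Hypothetical: builds fetch options with the user id in the Authorization
// header and an optional JSON body, mirroring createCluster's params object.
function buildParams(method, uid, data) {
  return {
    method,
    headers: {
      "Content-Type": "application/json;charset=utf-8",
      // Spreading a falsy value adds nothing, so the header is optional.
      ...(uid && { Authorization: uid }),
    },
    ...(data && { body: JSON.stringify(data) }),
  };
}
```

With these helpers, a call such as createCluster reduces to passing `buildParams("POST", uid, data)` to `fetch` and keeping the same `.then(handleErrors)` chain.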

Tips

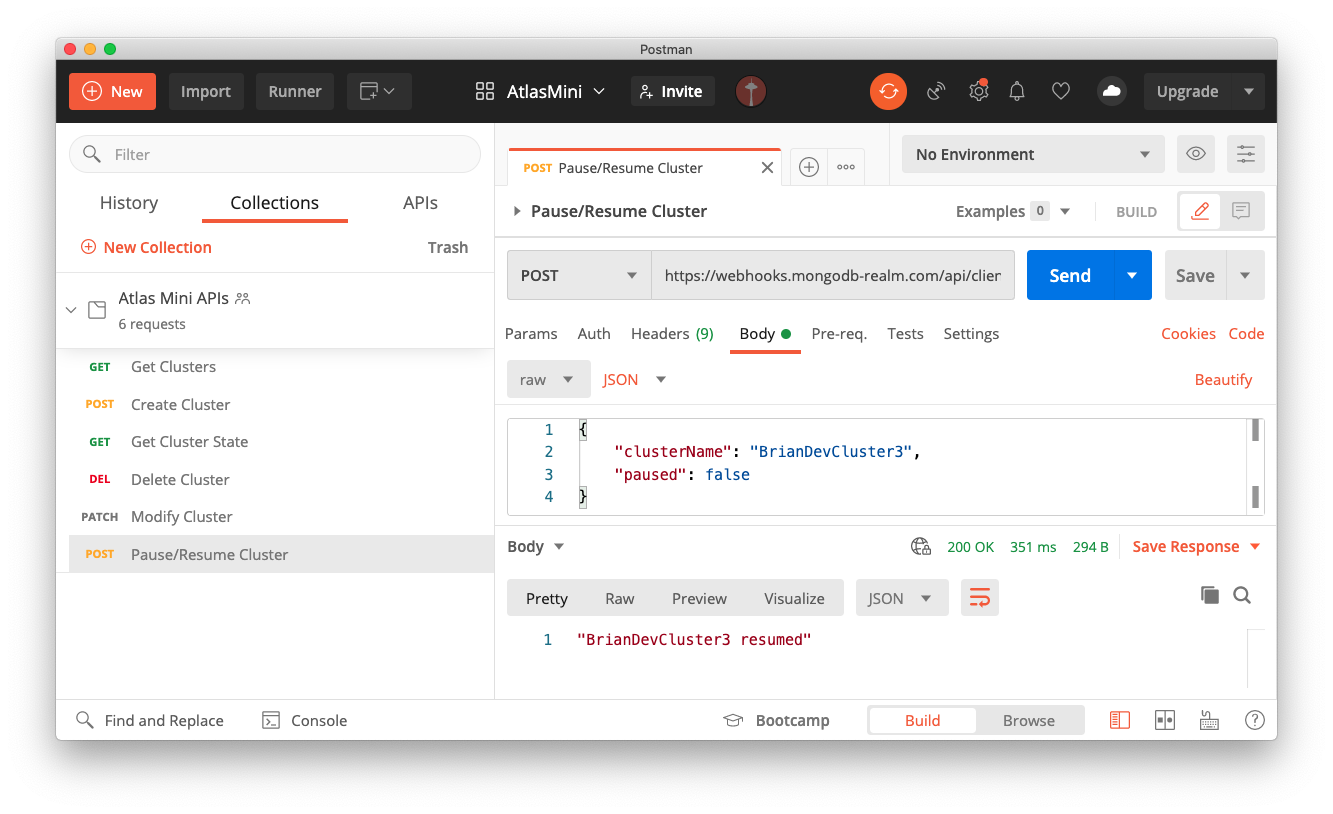

We had great success using Postman Team Workspaces to share and validate the backend APIs.


Conclusions

This prototype was created to demonstrate what’s possible, which, by now, you hopefully realize is anything! The guts of the solution are here; how you extend it is up to you.