Welcome to the final part of our single view blog series:

- In Part 1 we reviewed the business drivers behind single view projects, introduced a proven and repeatable 10-step methodology to creating the single view, and discussed the initial “Discovery” stage of the project.

- In Part 2 we dove deeper into the methodology by looking at the development and deployment phases of the project.

- In this final part, we wrap up with the single view maturity model, look at required database capabilities to support the single view, and present a selection of case studies.

If you want to get started right now, download the complete 10-Step Methodology to Creating a Single View whitepaper.

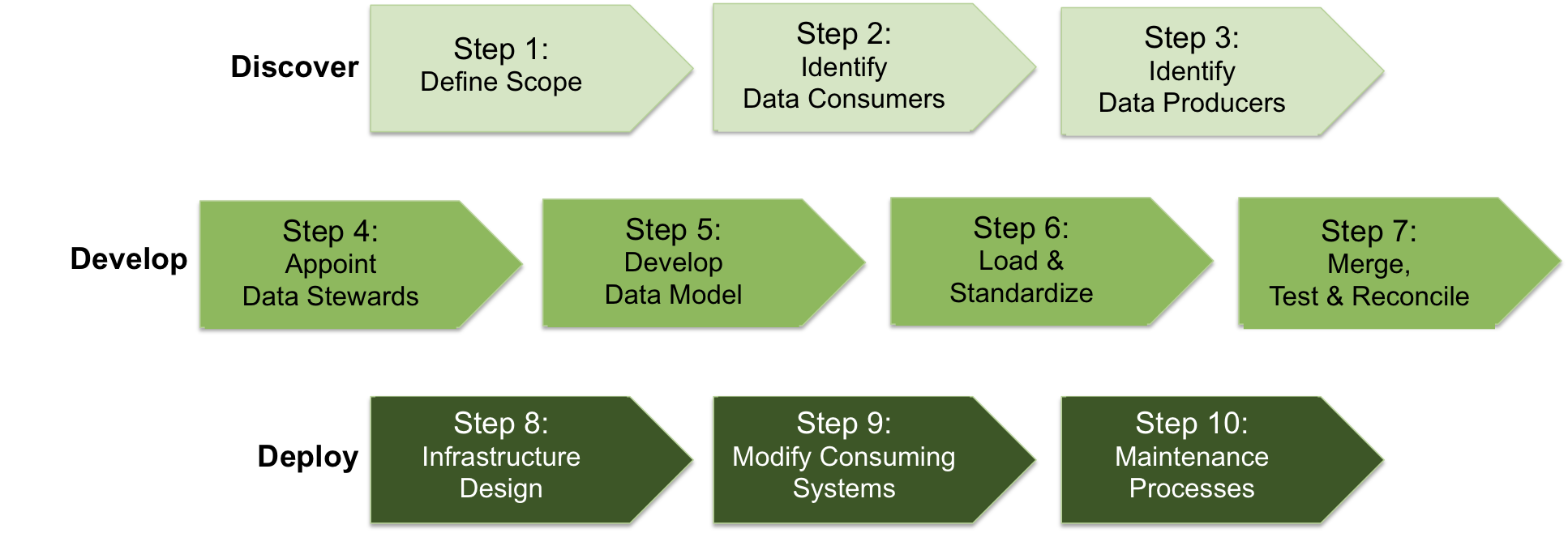

10-Step Single View Methodology

As a reminder, figure 1 shows the 10-step methodology to creating the single view.

In parts 1 and 2 of the blog series, we stepped through each of the methodology’s steps. Let’s now take a look at a roadmap for the single view – something we call the Maturity Model.

Single View Maturity Model

As discussed earlier in the series, most single view projects start by offering a read-only view of data aggregated from the source systems. But as projects mature, we have seen customers start to write to the single view. Initially they may start writing simultaneously to the source systems and single view to prove efficacy – before then writing to the single view first, and propagating updates back to the source systems. The evolution path of single view maturity is shown below.

What are the advantages of writing directly to the single view?

- Real-time view of the data. Users are consuming the freshest version of the data, rather than waiting for updates to propagate from the source systems to the single view.

- Reduced application complexity. Read and write operations no longer need to be segregated between different systems. Of course, it is necessary to then implement a change data capture process that pushes writes against the single view back to the source databases. However, in a well-designed system, the mechanism need only be implemented once for all applications, rather than read/write segregation duplicated across the application estate.

- Enhanced application agility. With traditional relational databases running the source systems, it can take weeks or months’ worth of developer and DBA effort to update schemas to support new application functionality. MongoDB’s flexible data model with a dynamic schema makes the addition of new fields a runtime operation, allowing the organization to evolve applications more rapidly.

Figure 3 shows an architectural approach to synchronizing writes against the single view back to the source systems. Writes to the single view are pushed into a dedicated update queue, or directly into an ETL pipeline or message queue. Again, MongoDB consulting engineers can assist with defining the most appropriate architecture.
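To make the write path above more concrete, here is a minimal sketch in Python. It is a toy model and not the architecture from the figure: a dictionary stands in for the single view, an in-memory `deque` stands in for the dedicated update queue, and the class name `SingleViewWriter` and its methods are our own illustrative names.

```python
from collections import deque


class SingleViewWriter:
    """Toy write path: update the single view first, then queue the change."""

    def __init__(self):
        self.single_view = {}        # stands in for the single view collection
        self.update_queue = deque()  # stands in for the dedicated update queue

    def write(self, customer_id, changes, source_system):
        # 1. Apply the change to the single view so readers see it immediately.
        doc = self.single_view.setdefault(customer_id, {"_id": customer_id})
        doc.update(changes)
        # 2. Record the change so a separate worker can push it to the source.
        self.update_queue.append(
            {"customer_id": customer_id, "changes": dict(changes), "target": source_system}
        )

    def drain(self, apply_to_source):
        """Deliver queued changes in order; apply_to_source is caller-supplied."""
        delivered = 0
        while self.update_queue:
            apply_to_source(self.update_queue.popleft())
            delivered += 1
        return delivered
```

In a real deployment the queue would be durable (a message queue or ETL pipeline, as described above), and the `apply_to_source` callback would hold the logic specific to each source system.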

Required Database Capabilities to Support the Single View

The database used to store and manage the single view provides the core technology foundation for the project. Selection of the right database to power the single view is critical to determining success or failure.

Relational databases, once the default choice for enterprise applications, are unsuitable for single view use cases. The database is forced to simultaneously accommodate the schema complexity of all source systems, requiring significant upfront schema design effort. Any subsequent changes in any of the source systems’ schema – for example, when adding new application functionality – will break the single view schema. The schema must be updated, often causing application downtime. Adding new data sources multiplies the complexity of adapting the relational schema.

MongoDB provides a mature, proven alternative to the relational database for enterprise applications, including single view projects. As discussed below, the required capabilities demanded by a single view project are well served by MongoDB:

Flexible Data Model

MongoDB's document data model makes it easy for developers to store and combine data of any structure within the database, without giving up sophisticated validation rules to govern data quality. The schema can be dynamically modified without application or database downtime. If, for example, we want to start to store geospatial data associated with a specific customer event, the application simply writes the updated object to the database, without costly schema modifications or redesign.
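As a rough illustration of the geospatial example, the sketch below uses plain Python dictionaries to stand in for documents. The field names (`customer_id`, `events`, `location`) and both helper functions are hypothetical. The location value follows the GeoJSON `Point` shape, with longitude listed before latitude.

```python
def add_event_location(customer, event_id, longitude, latitude):
    """Attach a GeoJSON Point to one event; other events are left untouched."""
    for event in customer["events"]:
        if event["event_id"] == event_id:
            event["location"] = {"type": "Point", "coordinates": [longitude, latitude]}
            return True
    return False


def is_valid(customer):
    """Stand-in for a validation rule: every customer needs an id and a name."""
    return isinstance(customer.get("customer_id"), str) and bool(customer.get("name"))


customer = {
    "customer_id": "C-1001",
    "name": "Ada Example",
    "events": [
        {"event_id": "E-1", "type": "branch_visit"},
        {"event_id": "E-2", "type": "web_login"},
    ],
}

# Only the first event gains a location; no other document has to change.
add_event_location(customer, "E-1", -73.99, 40.73)
```

The point of the sketch is that events with and without a `location` field sit side by side in the same document, while the validation rule still applies to the fields that every customer must have.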

MongoDB documents are typically modeled to localize all data for a given entity – such as a financial asset class or user – into a single document, rather than spreading it across multiple relational tables. Document access can be completed in a single MongoDB operation, rather than having to JOIN separate tables spread across the database. As a result of this data localization, application performance is often much higher when using MongoDB, which can be the decisive factor in improving customer experience.

Intelligent Insights, Delivered in Real Time

With all relevant data for our business entity consolidated into a single view, it is possible to run sophisticated analytics against it. For example, we can start to analyze customer behavior to better identify cross-sell and upsell opportunities, or risk of churn or fraud. Analytics and machine learning must be able to run across vast swathes of data stored in the single view. Traditional data warehouse technologies are unable to economically store and process these data volumes at scale. Hadoop-based platforms are unable to serve the models generated from this analysis, or perform ad-hoc investigative queries with the low latency demanded by real-time operational systems.

The MongoDB query language and rich secondary indexes enable developers to build applications that can query and analyze the data in multiple ways. Data can be accessed by single keys, ranges, text search, graph, and geospatial queries through to complex aggregations and MapReduce jobs, returning responses in milliseconds. Data can be dynamically enriched with elements such as user identity, location, and last access time to add context to events, providing behavioral insights and actionable customer intelligence. Complex queries are executed natively in the database without having to use additional analytics frameworks or tools, and avoiding the latency that comes from ETL processes that are necessary to move data between operational and analytical systems in legacy enterprise architectures.
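To give a flavor of the complex aggregations mentioned above, here is a hedged sketch of an aggregation pipeline expressed as Python data. The field names (`last_access`, `segment`, `product_count`) and the function name are assumptions made for illustration. The stages themselves (`$match`, `$group`, `$sort`, `$limit`) are standard aggregation operators.

```python
from datetime import datetime, timedelta, timezone


def inactive_customers_pipeline(now, days_inactive=90, limit=100):
    """Group customers who have not been seen recently by segment (churn signal)."""
    cutoff = now - timedelta(days=days_inactive)
    return [
        # Keep only customers whose last access is older than the cutoff.
        {"$match": {"last_access": {"$lt": cutoff}}},
        # Count them per segment and average how many products they hold.
        {"$group": {
            "_id": "$segment",
            "customers": {"$sum": 1},
            "avg_products": {"$avg": "$product_count"},
        }},
        # Largest at-risk segments first.
        {"$sort": {"customers": -1}},
        {"$limit": limit},
    ]


pipeline = inactive_customers_pipeline(datetime(2024, 1, 1, tzinfo=timezone.utc))
```

With a driver such as PyMongo, a list like this is what you would pass to a collection's `aggregate` method, so the grouping runs inside the database rather than in application code.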

MongoDB replica sets can be provisioned with dedicated analytics nodes. This allows data scientists and business analysts to simultaneously run exploratory queries and generate reports and machine learning models against live data, without impacting nodes serving the single view to operational applications, again avoiding lengthy ETL cycles.
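One way to steer analytical workloads to dedicated nodes is through the read preference in the connection string. The helper below is a sketch only: the host names, the replica set name, and the `nodeType:ANALYTICS` tag are assumptions, and the tag must match whatever tags are actually configured on your replica set members.

```python
from urllib.parse import urlencode


def analytics_uri(hosts, replica_set, tag_key="nodeType", tag_value="ANALYTICS"):
    """Build a connection string that reads from tagged secondary members."""
    options = urlencode(
        {
            "replicaSet": replica_set,
            "readPreference": "secondary",
            # Tag pair is hypothetical; use the tags defined on your own nodes.
            "readPreferenceTags": f"{tag_key}:{tag_value}",
        },
        safe=":",  # keep the key:value separator readable in the URI
    )
    return f"mongodb://{','.join(hosts)}/?{options}"


uri = analytics_uri(["db1.example.com:27017", "db2.example.com:27017"], "rs0")
```

Operational applications would keep using their normal connection string, so exploratory queries from analysts do not compete with them for resources on the same nodes.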

Predictable Scalability with Always-on Availability

Successful single view projects tend to become very popular, very quickly. As new data sources and attributes are onboarded, along with additional consumers such as applications, channels, and users, demands for processing and storage capacity grow quickly.

To address these demands, MongoDB provides horizontal scale-out for the single view database on low cost, commodity hardware using a technique called sharding, which is transparent to applications. Sharding distributes data across multiple database instances. Sharding allows MongoDB deployments to address the hardware limitations of a single server, such as bottlenecks in CPU, RAM, or storage I/O, without adding complexity to the application. MongoDB automatically balances single view data in the cluster as the data set grows or the size of the cluster increases or decreases.
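The idea of distributing data by a shard key can be sketched in a few lines of Python. This is a deliberate simplification and not MongoDB's implementation: MongoDB partitions data into ranges of shard key values and rebalances them automatically, whereas the sketch simply hashes a key and takes the remainder. The function names and the `customer_id` key are illustrative.

```python
import hashlib


def shard_for(shard_key, shard_count):
    """Map a shard key to a shard index deterministically (simplified model)."""
    digest = hashlib.md5(str(shard_key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def distribute(documents, key_field, shard_count):
    """Place each document on the shard chosen by its key."""
    shards = [[] for _ in range(shard_count)]
    for doc in documents:
        shards[shard_for(doc[key_field], shard_count)].append(doc)
    return shards


docs = [{"customer_id": f"C-{n}"} for n in range(1000)]
shards = distribute(docs, "customer_id", 4)
```

The application only supplies the key; which server holds a given document is decided by the routing layer, which is what keeps sharding transparent to application code.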

MongoDB maintains multiple replicas of the data to maintain database availability. Replica failures are self-healing, and so single view applications remain unaffected by underlying system outages or planned maintenance. Replicas can be distributed across regions for disaster recovery and data locality to support global user bases.

Enterprise Deployment Model

MongoDB can be run on a variety of platforms – from commodity x86 and ARM-based servers, through to IBM Power and zSeries systems. You can deploy MongoDB onto servers running in your own data center, or public and hybrid clouds. With the MongoDB Atlas service, we can even run the database for you.

MongoDB Enterprise Advanced is the production-certified, secure, and supported version of MongoDB, offering:

- Advanced Security. Robust access controls via LDAP, Active Directory, Kerberos, x.509 PKI certificates, and role-based access control to ensure a separation of privileges across applications and users. Data anonymization can be enforced by read-only views to protect sensitive, personally identifiable information. Data in flight and at rest can be encrypted to FIPS 140-2 standards, and an auditing framework for forensic analysis is provided.

- Automated Deployment and Upgrades. With Ops Manager, operations teams can deploy and upgrade distributed MongoDB clusters in seconds, using a powerful GUI or programmatic API.

- Point-in-time Recovery. Continuous backup and consistent snapshots of distributed clusters allow seamless data recovery in the event of system failures or application errors.
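To illustrate the read-only views mentioned under Advanced Security, the sketch below defines a view pipeline that excludes sensitive fields, plus a small local function that mimics the effect on a single document. The field names and function names are hypothetical, and the local emulation only handles top-level fields.

```python
SENSITIVE_FIELDS = ("ssn", "date_of_birth", "email")  # hypothetical field names


def anonymizing_view_pipeline(fields=SENSITIVE_FIELDS):
    """Pipeline for a read-only view that leaves out the sensitive fields."""
    return [{"$project": {field: 0 for field in fields}}]


def redact(document, fields=SENSITIVE_FIELDS):
    """Local emulation of that projection, for top-level fields only."""
    return {key: value for key, value in document.items() if key not in fields}


record = {"_id": 1, "name": "Ada Example", "ssn": "000-00-0000", "segment": "retail"}
public_record = redact(record)
```

A view is defined by a source collection and a pipeline like the one above. Users who are granted access to the view, but not to the underlying collection, only ever see the redacted shape.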

Single View in Action

MongoDB has been used in many single view projects. The following case studies highlight several examples.

MetLife: From Stalled to Success in 3 Months

In 2011, MetLife’s new executive team knew they had to transform how the insurance giant catered to customers. The business wanted to harness data to create a 360-degree view of its customers so it could know and talk to each of its more than 100 million clients as individuals. But the Fortune 50 company had already spent many years trying unsuccessfully to develop this kind of centralized system using relational databases.

That is why the 150-year-old insurer turned to MongoDB. Using MongoDB’s technology over just 2 weeks, MetLife created a working prototype of a new system that pulled together every single relevant piece of customer information about each client. Three months later, the finished version of this new system, called the 'MetLife Wall,' was in production across MetLife’s call centers.

The Wall collects vast amounts of structured and unstructured information from MetLife’s more than 70 different administrative systems. After many years of trying, MetLife solved one of the biggest data challenges dogging companies today, all by using MongoDB’s innovative approach for organizing massive amounts of data. You can learn more from the case study.

CERN: Delivering a Single View of Data from the LHC to Accelerate Scientific Research and Discovery

The European Organisation for Nuclear Research, known as CERN, plays a leading role in the fundamental studies of physics. It has been instrumental in many key global innovations and breakthroughs, and today operates the world's largest particle physics laboratory. The Large Hadron Collider (LHC), nestled under the mountains on the Franco-Swiss border, is central to its research into the origins of the universe.

Using MongoDB, CERN built a multi-data center Data Aggregation System accessed by over 3,000 physicists from nearly 200 research institutions across the globe. MongoDB provides the ability for researchers to search and aggregate information distributed across all of the backend data services, and bring that data into a single view.

MongoDB was selected for the project based on its flexible schema, providing the ability to ingest and store data of any structure. In addition, its rich query language and extensive secondary indexes give users fast and flexible access to data by any query pattern. This can range from simple key-value look-ups, through to complex search, traversals and aggregations across rich data structures, including embedded sub-documents and arrays.

You can learn more from the case study.

Wrapping Up Part 3

That wraps up our 3-part blog series. Bringing together disparate data into a single view is a challenging undertaking. However, by applying proven methodologies, tools, and technologies, organizations can innovate faster, with lower risk and cost.

Remember, if you want to get started right now, download the complete 10-Step Methodology to Creating a Single View whitepaper.