We recently caught up with the team at VISO TRUST to check in and learn more about their use of MongoDB and their evolving search needs (if you missed our first story, read more about VISO TRUST’s AI use cases with MongoDB in our first blog post).

VISO TRUST is an AI-powered third-party cyber risk and trust platform that enables any company to access actionable vendor security information in minutes. VISO TRUST delivers the fast and accurate intelligence needed to make informed cybersecurity risk decisions at scale for companies at any maturity level.

Since our last discussion back in September 2023, VISO TRUST has adopted our new dedicated Search Nodes architecture and scaled up both dense and sparse embeddings and retrieval to improve the user experience for their customers. We sat down for a deeper dive with Pierce Lamb, Senior Software Engineer on the Data and Machine Learning team at VISO TRUST, to hear more about the latest updates.

Check out our AI resource page to learn more about building AI-powered apps with MongoDB.

How have things been evolving at VISO TRUST? What are some of the new things you're excited about since we spoke last?

There have definitely been some exciting developments since we last spoke. Since then, we’ve implemented a new technique for extracting information out of PDF and image files that is much more accurate and breaks extractions into clear semantic units: sentences, paragraphs, and table rows. This might sound simple, but correctly extracting semantic units out of these PDF files is not an easy task by any means. We tested the entire Python ecosystem of PDF extraction libraries, cloud-based OCR services, and more, and settled on what we believe is currently state-of-the-art. For a retrieval augmented generation (RAG) system, which includes vector search, the accuracy of data extraction is the foundation on which everything else rests. Improving this process is a big win and will continue to be a mainstay of our focus.

Last time we spoke, I mentioned that we were using MongoDB Atlas Vector Search to power a dense retrieval system and that we had plans to build a re-ranking architecture. I’m happy to confirm that we have since achieved this goal. In our intelligent question-answering service, every time a question is asked, our re-ranking architecture applies four levels of ranking and scoring to a set of candidate contexts in a matter of seconds, which large language models (LLMs) then use to answer the question.

One additional exciting announcement is that we’re now using MongoDB Atlas Search Nodes, which allow workload isolation by scaling search independently from our database. Previously, we were upgrading our entire database instance solely because our search needs were changing so rapidly (but our database needs were not). Now we are able to closely tune our search workloads to specific nodes and allow our database needs to change at a much different pace. As an example, retrieval is much easier to track and tune with search nodes that can fit the entire Atlas Search Index in memory (which has significant latency implications).

As many have echoed recently, our usage of LLMs has not reduced or eliminated our use of discriminative model inference but rather increased it. As the database that powers our ML tools, MongoDB has become the place we store and retrieve training data, which is a big performance improvement over AWS S3. We continue to use more and more model inference to perform tasks like classification that the in-context learning of LLMs cannot beat. We let LLMs stick to the use cases they are really good at like dealing with imperfect human language and providing labeled training data for discriminative models.

You mentioned the recent adoption of Search Nodes. What impacts have you seen so far, especially given your existing usage of Atlas Vector Search?

We were excited when we heard the announcement of Search Nodes in General Availability, as the offering solves an acute pain point we’d been experiencing. MongoDB started as the place where our machine learning and data team backed up and stored training data generated by our Document Intelligence Pipeline. When the requirements to build a generative AI product became clear, we were thrilled to see that MongoDB had a vector search offering because all of our document metadata already existed in Atlas. We were able to experiment with, deploy, and grow our generative AI product right on top of MongoDB. Our deployment, however, was now serving multiple use cases: backing up and storing data created by our pipeline and also servicing our vector search needs. The latter forced us to scale the entire deployment multiple times when our original MongoDB use case didn’t require it.

Atlas Search Nodes enable us to decouple these two use cases and scale them independently. It was incredibly easy to deploy our search data to Atlas Search Nodes, requiring only a few button clicks. Furthermore, the memory requirements of vector search can now match our Atlas Search Node deployment exactly; we do not need to consider any extra memory for our storage and backup use case. This is a crucial consideration for keeping vector search fast and streamlined.

Can you go into a bit more detail on how your use cases have evolved with Vector Search, especially as it relates to dense and sparse embeddings and retrieval?

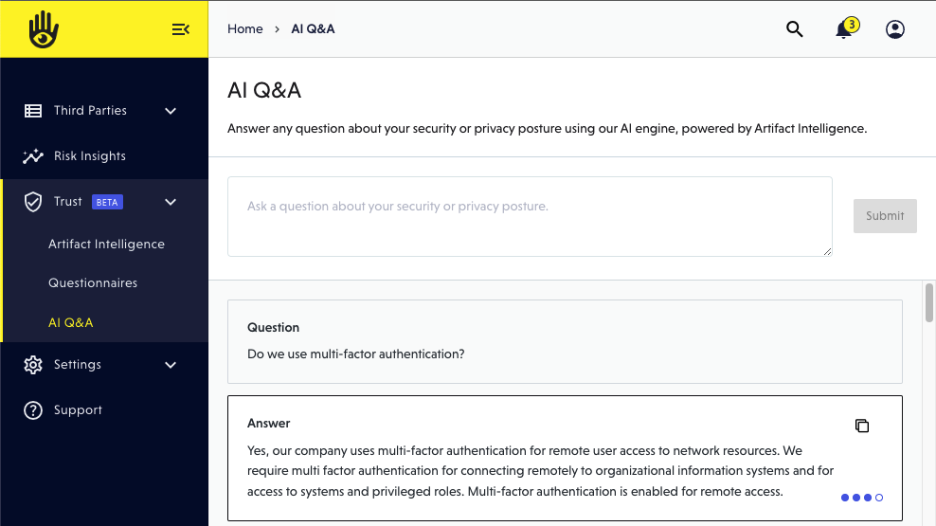

We provide a Q&A system that allows clients to ask questions of the security documents they or their vendors upload. For example, if a client wanted to know what one of their vendor’s password policies is, they could ask the system that question and get an answer with cited evidence without needing to look through the documents themselves. The same system can be used to automatically answer third-party security questionnaires our clients receive by parsing the questions out of them and answering those questions using data from our client’s documents. This saves a lot of time because answering security questions can often take weeks and involve multiple departments.

The above system relies on three main collections separated via the semantic units mentioned above: paragraphs, sentences, and table rows. These are extracted from various security compliance documents uploaded to the VISO TRUST platform (things like SOC2s, ISOs, and security policies, among others). Each sentence has a field with an ObjectId that links to the corresponding paragraph or table row for easy look-up. To give a sense of size, the sentences collection is on the order of tens of millions of documents and growing every day. When a question request enters the re-ranking system, sparse retrieval (keyword search for similarity) is performed first, followed by dense retrieval. Both use a list of IDs passed with the request to filter down to the set of documents the context can come from.
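To make the filtered dense retrieval step more concrete, here is a minimal sketch of how such a query could be expressed as an Atlas `$vectorSearch` aggregation stage. It is not VISO TRUST's actual query: the index name `sentence_vector_index` and the field names `embedding`, `document_id`, and `text` are hypothetical placeholders, and the function only builds the pipeline as plain Python data so it can be inspected without a database connection.

```python
def build_dense_retrieval_pipeline(query_vector, allowed_document_ids,
                                   limit=100, num_candidates=1000):
    """Build an aggregation pipeline for filtered vector search.

    All index and field names below are hypothetical placeholders.
    """
    if num_candidates < limit:
        raise ValueError("num_candidates must be at least as large as limit")
    return [
        {
            "$vectorSearch": {
                "index": "sentence_vector_index",  # hypothetical index name
                "path": "embedding",               # hypothetical vector field
                "queryVector": list(query_vector),
                "numCandidates": num_candidates,
                "limit": limit,
                # Restrict the search to sentences from the documents
                # whose IDs were passed with the request.
                "filter": {"document_id": {"$in": list(allowed_document_ids)}},
            }
        },
        {
            # Keep the sentence text and expose the similarity score.
            "$project": {
                "text": 1,
                "score": {"$meta": "vectorSearchScore"},
            }
        },
    ]


pipeline = build_dense_retrieval_pipeline([0.1, 0.2, 0.3], ["doc-1", "doc-2"])
```

With a driver such as PyMongo, a pipeline like this would be passed to the sentence collection's `aggregate` method. Note that a field used in `filter` has to be declared as a filter field in the vector search index definition.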

The document filtering generally takes the scope from tens of millions of sentences to tens or hundreds of thousands. Sparse and dense retrieval then independently score and rank the remaining sentences, and each returns its top one hundred in a matter of milliseconds to seconds. The two result sets are merged into a final set of one hundred, favoring dense results unless a sparse result meets certain thresholds.
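One way to picture the merge step is the sketch below. The interview does not describe the actual thresholds or merge rules, so this assumes a single hypothetical score cutoff (`sparse_threshold`): sparse hits that clear it are always kept, and the remaining slots are filled with dense hits in rank order.

```python
def merge_dense_sparse(dense, sparse, sparse_threshold, limit=100):
    """Merge two ranked result lists into one list of sentence IDs.

    dense, sparse: lists of (sentence_id, score) tuples, best first.
    sparse_threshold: hypothetical cutoff a sparse hit must reach to be kept.
    """
    strong_sparse = [sid for sid, score in sparse if score >= sparse_threshold]
    dense_ids = [sid for sid, _ in dense]
    # dict.fromkeys removes duplicates while preserving first-seen order.
    merged = list(dict.fromkeys(strong_sparse + dense_ids))
    return merged[:limit]


dense_hits = [("a", 0.91), ("b", 0.88), ("c", 0.80)]
sparse_hits = [("d", 12.4), ("b", 3.1), ("e", 1.2)]
merged = merge_dense_sparse(dense_hits, sparse_hits, sparse_threshold=10.0, limit=3)
```

In this toy input only the sparse hit `d` clears the cutoff, so the merged set is `d` plus the two best dense hits. When no sparse hit clears the cutoff, the result is simply the dense top results.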

At this point, we have a set of one hundred sentences, scored and ranked by similarity to the question using two different methods powered by Atlas Search, in milliseconds to seconds. We then pass those hundred sentences, in parallel, to a multi-representational model and a cross-encoder model, each of which provides its own scoring and ranking of each sentence. Once that completes, we have four independent levels of scoring and ranking for each sentence (sparse, dense, multi-representational, and cross-encoder). This data is passed to the Weighted Reciprocal Rank Fusion algorithm, which uses the four independent rankings to create a final ranking and sorting and returns the number of results requested by the caller.
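For readers unfamiliar with the technique, here is a minimal sketch of weighted reciprocal rank fusion. Each sentence receives, from every ranker, a contribution of `weight / (k + rank)`, and the contributions are summed. The weights shown and the smoothing constant `k = 60` (a commonly used default) are assumptions for illustration; the interview does not state the values used in production.

```python
from collections import defaultdict


def weighted_rrf(rankings, weights, k=60, top_n=None):
    """Fuse several rankings with weighted reciprocal rank fusion.

    rankings: dict mapping ranker name -> list of sentence IDs, best first.
    weights:  dict mapping ranker name -> weight (defaults to 1.0 if missing).
    k:        smoothing constant; 60 is a common choice, assumed here.
    top_n:    number of results to return (None returns all).
    """
    scores = defaultdict(float)
    for name, ranked_ids in rankings.items():
        weight = weights.get(name, 1.0)
        for rank, sentence_id in enumerate(ranked_ids, start=1):
            scores[sentence_id] += weight / (k + rank)
    # Sort by fused score, highest first; break ties by ID for determinism.
    ordered = sorted(scores, key=lambda sid: (-scores[sid], sid))
    return ordered[:top_n]


rankings = {
    "sparse": ["s2", "s1", "s3"],
    "dense": ["s1", "s2", "s3"],
    "multi_representational": ["s1", "s3", "s2"],
    "cross_encoder": ["s2", "s1", "s3"],
}
equal = weighted_rrf(rankings, weights={})
boosted = weighted_rrf(rankings, weights={"cross_encoder": 3.0}, top_n=2)
```

With equal weights, `s1` comes out on top because it ranks first or second everywhere. Tripling the hypothetical cross-encoder weight moves `s2` ahead, which shows how the weights let one ranker count for more than the others.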

How are you measuring the impact or relative success of your retrieval efforts?

The monolithic collections I spoke about above grow substantially every day: we’ve almost tripled our sentence volume since first bringing data into MongoDB while still maintaining the same low latency our users depend on. We needed a vector database partner that allowed us to easily scale as our datasets grow and continue to deliver millisecond-to-second performance on similarity searches. Our system can often have many in-flight question requests occurring in parallel, and Atlas has allowed us to scale with the click of a button when we start to hit performance limits.

One piece of advice I would give to readers creating a RAG system using MongoDB’s Vector Search is to use read preferences to ensure that retrieval queries and other reads occur primarily on secondary nodes. We use ReadPreference.secondaryPreferred almost everywhere, and this has helped substantially with the load on the system.
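Besides setting it through a driver's ReadPreference API, the same read preference can be set with the `readPreference` connection string option. The helper below is only an illustrative sketch that adds this option to an existing URI using the Python standard library; the function name and the hostname in the example are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_read_preference(uri, mode="secondaryPreferred"):
    """Return a copy of a MongoDB URI with the readPreference option set.

    Any readPreference value already present in the URI is replaced.
    """
    parts = urlsplit(uri)
    options = dict(parse_qsl(parts.query))
    options["readPreference"] = mode
    return urlunsplit(parts._replace(query=urlencode(options)))


# Placeholder hostname, not a real cluster.
uri = with_read_preference(
    "mongodb+srv://cluster0.example.mongodb.net/?retryWrites=true"
)
```

With `secondaryPreferred`, reads go to secondary members when one is available and fall back to the primary otherwise.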

Lastly, can you describe how MongoDB helps you execute on your goal of helping clients make better-informed risk assessments?

As most people involved in compliance, auditing, and risk assessment efforts will report, these essential tasks tend to significantly slow down business transactions. This is in part because the bar for accuracy is extremely high, and also because these tasks tend to be human-reliant and slow to adopt new technology. At VISO TRUST, we are committed to delivering that same level of accuracy, but much faster. Since 2017, we have been executing on that vision, and our generative AI products represent a leap forward in enabling our clients to assess and mitigate risk at a faster pace with increased levels of accuracy.

MongoDB has been a key partner in the success of our generative AI products by becoming the reliable place we can store and query the data for our AI-based results.

Getting started

Thanks so much to Pierce Lamb for sharing details on VISO TRUST’s AI-powered applications and experiences with MongoDB.

To learn more about MongoDB Atlas Search, check out our learning byte, or if you’re ready to get started, head over to the product page to explore tutorials, documentation, and whitepapers. You’ll be just a few clicks away from spinning up your own vector search engine where you can experiment with the power of vector embeddings, RAG, and more!