As recently as 12 months ago, any mention of retrieval-augmented generation (RAG) would have left most of us confused. However, with the explosion of generative AI, the RAG architectural pattern has now firmly established itself in the enterprise landscape.

RAG presents developers with a potent combination. They can take the reasoning capabilities of pre-trained, general-purpose LLMs and feed them with real-time, company-specific data. As a result, developers can build AI-powered apps that generate outputs grounded in enterprise data and knowledge that is accurate, up-to-date, and relevant. They can do this without having to turn to specialized data science teams to either retrain or fine-tune models — a complex, time-consuming, and expensive process.

Over this series of Building AI with MongoDB blog posts, we’ve featured developers using tools like MongoDB Atlas Vector Search for RAG in a whole range of applications. Take a look at our AI case studies page and you’ll find examples spanning conversational AI with chatbots and voice bots, co-pilots, threat intelligence and cybersecurity, contract management, question-answering, healthcare compliance and treatment assistants, content discovery and monetization, and more.

Further reflecting its growing adoption, Retool’s State of AI survey from a couple of weeks ago shows Atlas Vector Search earning the highest net promoter score (NPS) among developers.

Check out our AI resource page to learn more about building AI-powered apps with MongoDB.

In this blog post, I’ll highlight three more interesting and novel use cases:

-

Unlocking geological data for better decision-making and accelerating the path to net zero at Eni

-

Video and audio personalization at Potion

-

Unlocking insights from enterprise knowledge bases at Kovai

Eni makes terabytes of subsurface unstructured data actionable with MongoDB Atlas

Based in Italy, Eni is a leading integrated energy company with more than 30,000 employees across 69 countries. In 2020, the company launched a strategy to reach net zero emissions by 2050 and develop more environmentally and financially sustainable products.

Sabato Severino, Senior AI Solution Architect for Geoscience at Eni, explains the role of his team: “We’re responsible for finding the best solutions in the market for our cloud infrastructure and adapting them to meet specific business needs.”

Projects include using AI for drilling and exploration, leveraging cloud APIs to accelerate innovation, and building a smart platform to promote knowledge sharing across the company. Eni’s document management platform for geosciences offers an ecosystem of services and applications for creating and sharing content. It leverages embedded AI models to extract information from documents and stores unstructured data in MongoDB.

The challenges for Severino’s team were to maintain the platform as it ingested a growing volume of data — hundreds of thousands of documents and terabytes of data — and to enable different user groups to extract relevant insights from comprehensive records quickly and easily.

With MongoDB Atlas, Eni users can quickly find data spanning multiple years and geographies to identify trends and analyze models that support decision-making within their fields. The platform uses MongoDB Atlas Search to filter out irrelevant documents while also integrating AI and machine learning models, such as vector search, to make it even easier to identify patterns.

“The generative AI we’ve introduced currently creates vector embeddings from documents, so when a user asks a question, it retrieves the most relevant document and uses LLMs to build the answer,” explains Severino.

“We’re looking at migrating vector embeddings into MongoDB Atlas to create a fully integrated, functional system. We’ll then be able to use Atlas Vector Search to build AI-powered experiences without leaving the Atlas platform — a much better experience for developers.”

Read the full case study to learn more about Eni and how it is making unstructured data actionable.

Video personalization at scale with Potion and MongoDB

Potion enables salespeople to personalize prospecting videos at scale. Already over 7,500 sales professionals at companies including SAP, AppsFlyer, CaptivateIQ, and Opensense are using SendPotion to increase response rates, book more meetings, and build customer trust.

All a sales representative needs to do is record a video template, select which words need to be personalized, and let Potion’s audio and vision AI models do the rest. Kanad Bahalkar, co-founder and CEO at Potion explains:

“The sales rep tells us what elements need to be personalized in the video — that is typically provided as a list of contacts with their name, company, desired call-to-action, and so on. Our vision and audio models then inspect each frame and reanimate the video and audio with personalized messages lip-synced into the stream. Reanimation is done in bulk in minutes. For example, one video template can be transformed into over 1,000 unique video messages, personalized to each contact.”

Potion’s custom generative AI models are built with PyTorch and TensorFlow, and run on Amazon Sagemaker. Describing their models, Kanad says “Our vision model is trained on thousands of different faces, so we can synthesize the video without individualized AI training. The audio models are tuned on-demand for each voice.”

And where does the data for the AI lifecycle live? “This is where we use MongoDB Atlas,” says Kanad.

“We use the MongoDB database to store metadata for all the videos, including the source content for personalization, such as the contact list and calls to action. For every new contact entry created in MongoDB, a video is generated for it using our AI models, and a link to that video is stored back in the database. MongoDB also powers all of our application analytics and intelligence. With the insights we generate from MongoDB, we can see how users interact with the service, capturing feedback loops, response rates, video watchtimes, and more. This data is used to continuously train and tune our models in Sagemaker."

On selecting MongoDB Kanad says, “I had prior experience of MongoDB and knew how easy and fast it was to get started for both modeling and querying the data. Atlas provides the best-managed database experience out there, meaning we can safely offload running the database to MongoDB. This ease-of-use, speed, and efficiency are all critical as we build and scale the business."

To further enrich the SendPotion service, Kanad is planning to use more of the developer features within MongoDB Atlas. This includes Atlas Vector Search to power AI-driven semantic search and RAG for users who are exploring recommendations across video libraries. The engineering team is also planning on using Atlas Triggers to enable event-driven processing of new video content.

Potion is a member of the MongoDB AI Innovators program. Asked about the value of the program, Kanad responds, “Access to free credits helped support rapid build and experimentation on top of MongoDB, coupled with access to technical guidance and support."

Bringing the power of Vector Search to enterprise knowledge bases

Founded in 2011, Kovai is an enterprise software company that offers multiple products in both the enterprise and B2B SaaS arena. Since its founding, the company has grown to nearly 300 employees serving over 2,500 customers.

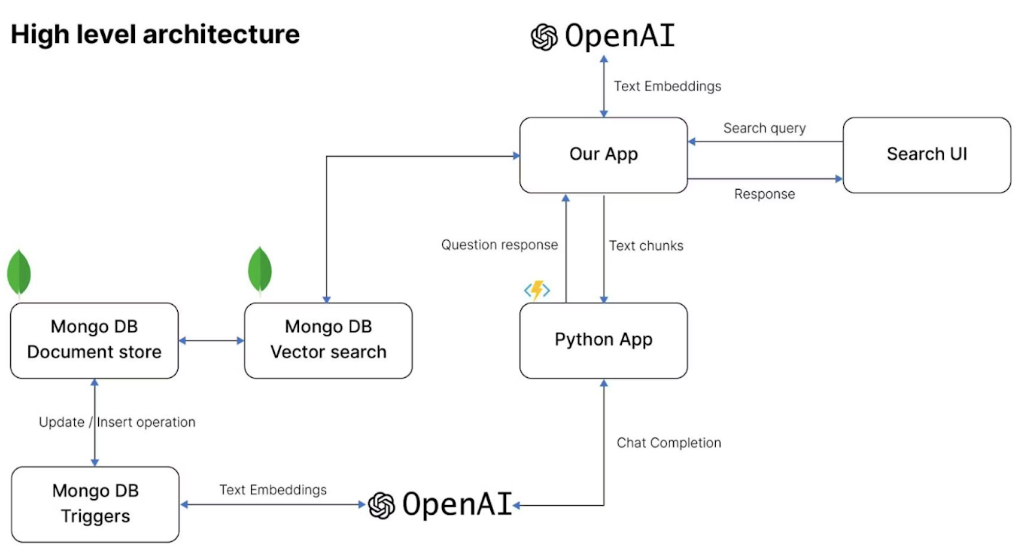

One of Kovai’s key products is Document360, a knowledge base platform for SaaS companies looking for a self-service software documentation solution. Seeing the rise of GenAI, Kovai began developing its AI assistant, “Eddy.” The assistant provides answers to customers' questions utilizing LLMs augmented by retrieving information in a Document360 knowledge base.

During the development phase Kovai’s engineering and data science teams explored multiple vector databases to power the RAG portion of the application. They found the need to sync data between its system-of-record MongoDB database and a separate vector database introduced inaccuracies in answers from the assistant.

The release of MongoDB Atlas Vector Search provided a solution with three key advantages for the engineers:

-

Architectural simplicity: MongoDB Vector Search's architectural simplicity helps Kovai optimize the technical architecture needed to implement Eddy.

-

Operational efficiency: Atlas Vector Search allows Kovai to store both knowledge base articles and their embeddings together in MongoDB collections, eliminating “data syncing” issues that come with other vendors.

-

Performance: Kovai gets faster query response from MongoDB Vector Search at scale to ensure a positive user experience.

Atlas Vector Search is robust, cost-effective, and blazingly fast!

Said Saravana Kumar, CEO, Kovai, when speaking about his team's experience

Specifically, the team has seen the average time taken to return three, five, and 10 chunks between two and four milliseconds, and if the question is a closed loop, the average time reduces to less than two milliseconds.

You can learn more about Kovai’s journey into the world of RAG in the full case study.

Getting started

As the case studies in our Building AI with MongoDB series demonstrate, retrieval-augmented generation is a key design pattern developers can use as they build AI-powered applications for the business. Take a look at our Embedding Generative AI whitepaper to explore RAG in more detail.