Since announcing MongoDB Atlas Vector Search preview availability back in June, we’ve seen rapid adoption from developers building a wide range of AI-enabled apps. Today we're going to talk to one of these customers.

VISO TRUST puts reliable, comprehensive, actionable vendor security information directly in the hands of decision-makers who need to make informed risk assessments. The company uses a combination of state-of-the-art models from OpenAI, Hugging Face, Anthropic, Google, and AWS, augmented by vector search and retrieval from MongoDB Atlas.

We sat down with Pierce Lamb, Senior Software Engineer on the Data and Machine Learning team at VISO TRUST, to learn more.

Tell us a little bit about your company. What are you trying to accomplish, and how does that benefit your customers or society more broadly?

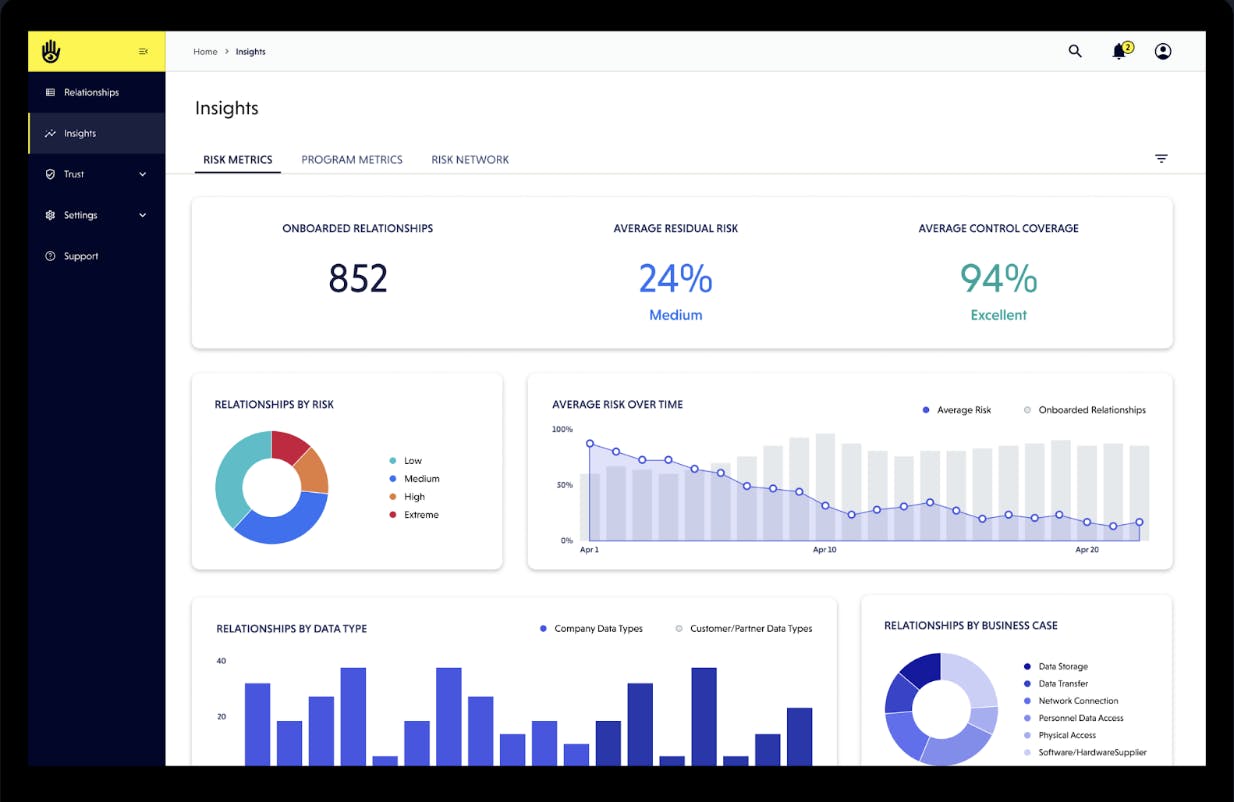

VISO TRUST is an AI-powered third-party cyber risk and trust platform that enables any company to access actionable vendor security information in minutes. VISO TRUST delivers fast and accurate intelligence needed to make informed cybersecurity risk decisions at scale for companies at any maturity level.

Our commitment to innovation means that we are constantly looking for ways to optimize business value for our customers. VISO TRUST ensures that complex business-to-business (B2B) transactions adequately protect the confidentiality, integrity, and availability of trusted information. VISO TRUST’s mission is to become the largest global provider of cyber risk intelligence and the intermediary for business transactions. By using VISO TRUST, customers reduce their threat surface in B2B transactions with vendors, which improves their overall risk posture and lowers the likelihood of security incidents such as breaches, malicious injections, and more. Today VISO TRUST has many great enterprise customers, including Instacart, Gusto, and Upwork, and they all say the same thing: 90% less work, an 80% reduction in the time needed to assess risk, and near-100% vendor adoption. Because it’s the only approach that can deliver accurate results at scale, customers are able, for the first time, to gain complete visibility into their entire third-party populations and take control of their third-party risk.

What does your application do, and what role does AI play in it?

The VISO TRUST Platform approach uses patented, proprietary machine learning and a team of highly qualified third-party risk professionals to automate vendor risk assessment at scale.

Simply put, VISO TRUST automates vendor due diligence and reduces third-party risk at scale, so security teams can stop chasing vendors, reading documents, or analyzing spreadsheets.

The VISO TRUST Platform engages third parties easily, saving everyone time and resources. In a five-minute, web-based session, third parties are prompted to upload the relevant security program artifacts they already have, and our supervised AI, which we call Artifact Intelligence, does the rest.

Security artifacts that enter VISO’s Artifact Intelligence pipeline interact with AI/ML in three primary ways. First, VISO deploys discriminator models that produce high-confidence predictions about features of the artifact. For example, one model performs artifact classification, another detects organizations mentioned in the artifact, another predicts which pages are likely to contain security controls, and so on. Our modules reference a comprehensive set of over 25 security frameworks and use document heuristics and natural language processing to analyze any written material and extract all relevant control information.

Second, text content is parsed out of each artifact in the form of sentences, paragraphs, headers, table rows, and more. These text blobs are embedded and stored in MongoDB Atlas, where they become part of our dense retrieval system. This system powers retrieval-augmented generation (RAG), using MongoDB features like Atlas Vector Search to supply ranked context to large language model (LLM) prompts.
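To make the retrieval step concrete, here is a minimal sketch of the kind of aggregation pipeline Atlas Vector Search accepts through its `$vectorSearch` stage, built as plain Python dicts so it runs without a database connection. The index name `blob_embeddings`, the field names `embedding`, `text`, and `artifact_id`, and the helper `build_vector_search_pipeline` are hypothetical and not VISO TRUST's actual schema. The query interface has also changed since the preview launched, so treat the current Atlas documentation as the authority.

```python
from typing import Any


def build_vector_search_pipeline(
    query_vector: list[float],
    k: int = 5,
    num_candidates: int = 100,
    index_name: str = "blob_embeddings",  # hypothetical index name
) -> list[dict[str, Any]]:
    """Return an aggregation pipeline that ranks text blobs by vector similarity."""
    # Atlas requires the candidate pool to be at least as large as the result limit.
    if num_candidates < k:
        raise ValueError("num_candidates must be at least k")
    return [
        {
            "$vectorSearch": {
                "index": index_name,
                "path": "embedding",              # hypothetical field holding each blob's vector
                "queryVector": query_vector,      # embedding of the question being asked
                "numCandidates": num_candidates,  # nearest-neighbor candidates the ANN search considers
                "limit": k,                       # number of ranked results returned
            }
        },
        {
            # Keep only what a prompt needs, plus the similarity score.
            "$project": {
                "_id": 0,
                "text": 1,
                "artifact_id": 1,
                "score": {"$meta": "vectorSearchScore"},
            }
        },
    ]


pipeline = build_vector_search_pipeline([0.12, -0.03, 0.88], k=3)
# With PyMongo, the pipeline would be passed to collection.aggregate(pipeline).
```

The `$project` stage returns the blob text alongside its score, which is the "ranked context" a prompt builder would consume.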

Third, we use RAG results to seed LLM prompts and chain together their outputs to produce extremely accurate factual information about the artifact in the pipeline. This gives customers instant access to intelligence that previously took weeks to produce.
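The following sketch shows one generic way ranked RAG results can seed a prompt, and how one LLM call's output can feed the next. The prompt wording, the two-step chain, and the `call_llm` callable are hypothetical; VISO TRUST's prompt engineering is custom-built and not described here. A stub stands in for a real model so the example runs offline.

```python
from typing import Callable


def build_prompt(question: str, context: list[str], max_chunks: int = 3) -> str:
    """Seed a prompt with the top-ranked chunks (assumed already sorted by score)."""
    numbered = "\n".join(f"[{i + 1}] {chunk}" for i, chunk in enumerate(context[:max_chunks]))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{numbered}\n"
        f"Question: {question}\nAnswer:"
    )


def chained_answer(question: str, context: list[str], call_llm: Callable[[str], str]) -> str:
    """Two-step chain: extract the relevant facts, then answer from those facts alone."""
    facts = call_llm(build_prompt(f"List the facts relevant to: {question}", context))
    # The first call's output becomes the only context for the second call.
    return call_llm(build_prompt(question, [facts]))


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model API: echoes the first numbered context chunk."""
    for line in prompt.splitlines():
        if line.startswith("[1] "):
            return line[4:]
    return ""


chunks = ["Data is encrypted at rest with AES-256.", "MFA is required for admins."]
print(chained_answer("Is data encrypted at rest?", chunks, fake_llm))
```

Passing the model in as a callable keeps the chaining logic independent of any particular provider, which matters when several LLMs are blended.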

VISO TRUST’s risk model analyzes your risk and delivers a complete assessment that provides everything you need to know to make qualified risk decisions about the relationship. In addition, the platform continuously monitors and re-assesses third-party vendors to ensure compliance.

What specific AI/ML techniques, algorithms, or models are utilized in your application?

For our discriminator models, we survey state-of-the-art pretrained models (typically narrowing the field to those available in Hugging Face’s transformers package) and fine-tune them on our own dataset.

For our dense retrieval system, we use MongoDB Atlas Vector Search, which internally uses the Hierarchical Navigable Small World (HNSW) algorithm to retrieve the stored embeddings most similar to a given piece of embedded text. We also plan to re-rank these results.
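HNSW is an approximate nearest-neighbor method. Instead of comparing a query against every stored vector, it navigates a layered proximity graph, trading a little recall for much faster lookups. For intuition, here is the exact brute-force search that HNSW approximates, ranking stored embeddings by cosine similarity. This is not how Atlas implements retrieval. The toy vectors and the names `cosine` and `top_k_cosine` are illustrative assumptions.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_cosine(
    query: list[float], vectors: dict[str, list[float]], k: int
) -> list[tuple[str, float]]:
    """Exact search: score every stored vector (O(n) per query), keep the k best."""
    scored = [(key, cosine(query, vec)) for key, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


store = {
    "blob-a": [1.0, 0.0, 0.0],
    "blob-b": [0.7, 0.7, 0.0],
    "blob-c": [0.0, 0.0, 1.0],
}
print(top_k_cosine([1.0, 0.1, 0.0], store, k=2))
```

A re-ranking stage typically takes a candidate list like this one and re-scores it with a slower but more precise model before the results reach a prompt.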

For our LLM system, we have experimented with GPT-3.5 Turbo, GPT-4, Claude 1 and 2, Bard, Vertex AI, and Amazon Bedrock. We blend a variety of these based on each customer’s accuracy, latency, and security needs.

Can you describe other AI technologies used in your application stack?

Some of the other frameworks we use are Hugging Face Transformers, Evaluate, Accelerate, and Datasets; PyTorch; Weights & Biases (WandB); and Amazon SageMaker. We also have custom-built libraries for ML experiments (fine-tuning) and for workflow orchestration, and all of our prompt engineering is custom-built.

Why did you choose MongoDB as part of your application stack? Which MongoDB features are you using and where are you running MongoDB?

The VISO TRUST Platform relies on MongoDB for specific objectives. MongoDB supports the mechanism our platform uses to engage third parties efficiently, combining AI with human oversight to automate the assessment of security artifacts at scale.

The fundamental value proposition of MongoDB, a robust document database, is why we originally chose it. It was first deployed as the storage and retrieval mechanism for all the factual information our Artifact Intelligence pipeline produces about artifacts. It still performs this function today, but it has also become our “vector/metadata database.”

MongoDB quickly ranks large quantities of embedded text blobs for us, while Atlas gives us all the ease of use of a cloud-ready database. We use the Atlas Search index visualization and the Query Profiler visualization daily, and even the basic display of a few documents in a collection often saves us time. Finally, when we recently backfilled embeddings across one of our MongoDB deployments, Atlas automatically provisioned more disk space for the large indexes without anyone needing to intervene, which was incredibly helpful.

What are the benefits you've achieved by using MongoDB?

I would say MongoDB and Atlas have given us two primary benefits. First, MongoDB was already where we stored metadata about artifacts in our system; with the introduction of Atlas Vector Search, we now have a comprehensive vector/metadata database, battle-tested over a decade, that solves our dense retrieval needs. There is no need to deploy, manage, and learn a new database: our vectors and artifact metadata are stored right next to each other.
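Storing vectors "right next to" metadata can be pictured as one document per text blob that carries both. The sketch below shows one plausible layout together with an Atlas Vector Search index definition that indexes the vector field and declares a metadata field as a pre-filter. The field names, the helper `make_blob_document`, and the 1536-dimension setting are hypothetical assumptions, not VISO TRUST's actual schema.

```python
from typing import Any

EMBEDDING_DIMENSIONS = 1536  # hypothetical: must match whichever embedding model is used

# Atlas Vector Search index definition: one vector field, plus a metadata
# field that queries can use to pre-filter candidates.
INDEX_DEFINITION: dict[str, Any] = {
    "fields": [
        {
            "type": "vector",
            "path": "embedding",
            "numDimensions": EMBEDDING_DIMENSIONS,
            "similarity": "cosine",
        },
        {"type": "filter", "path": "artifact_type"},
    ]
}


def make_blob_document(
    artifact_id: str, artifact_type: str, text: str, embedding: list[float]
) -> dict[str, Any]:
    """Build one document per text blob, with the vector beside the artifact metadata."""
    if len(embedding) != EMBEDDING_DIMENSIONS:
        raise ValueError(f"expected {EMBEDDING_DIMENSIONS} dimensions, got {len(embedding)}")
    return {
        "artifact_id": artifact_id,      # links back to the artifact's other metadata
        "artifact_type": artifact_type,  # e.g. the output of an artifact classifier
        "text": text,
        "embedding": embedding,
    }


doc = make_blob_document(
    "artifact-123", "SOC 2 report", "Backups are tested quarterly.", [0.0] * EMBEDDING_DIMENSIONS
)
# A $vectorSearch stage could then restrict candidates with:
#   "filter": {"artifact_type": {"$eq": "SOC 2 report"}}
```

Because the filter field lives in the same document as the vector, restricting a similarity search to one kind of artifact needs no join against a separate metadata store.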

Second, Atlas makes the painful parts of database management easy. Creating indexes, provisioning capacity, alerting on slow queries, visualizing data, and much more have saved us time and allowed us to focus on more important things.

What are your future plans for new applications, and how does MongoDB fit into them?

Retrieval-augmented generation is going to continue to be a first-class feature of our application. In this regard, the evolution of Atlas Vector Search and its ecosystem in MongoDB will be highly relevant to us. MongoDB has become the database our ML team uses, so as our ML footprint expands, our use of MongoDB will expand.

Getting started

Thanks so much to Pierce for sharing details on VISO TRUST’s AI-powered applications and experiences with MongoDB.

The best way to get started with Atlas Vector Search is to head over to the product page. There you will find tutorials, documentation, and whitepapers, along with the ability to sign up for MongoDB Atlas. You’ll be just a few clicks away from spinning up your own vector search engine, where you can experiment with the power of vector embeddings and RAG. We’d love to see what you build, and we are eager for any feedback that will make the product even better in the future!